In apps that allow users to post public content, such as forums, social networks and blogging platforms, there is always a risk that inappropriate content gets published. In this post, we’ll look at ways you can automatically moderate offensive content in your Firebase app using Cloud Functions.

The most commonly used strategy to moderate content is “reactive moderation”. Typically, you’ll add a link allowing users to report inappropriate content so that you can manually review it and take down content that does not comply with your house rules. You can complement reactive moderation, and better prevent offensive content from being publicly visible, by adding automated moderation mechanisms. Let’s see how you can add automatic checks for offensive content in the text and photos users publish in your Firebase apps, using Cloud Functions.

Content moderation types

We’ll perform two types of automatic content moderation:

Text moderation

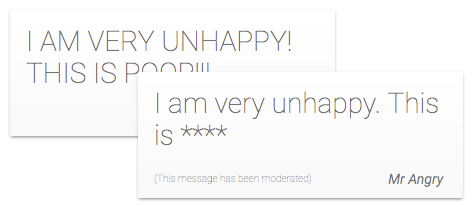

Text moderation, where we’ll remove swear words and fix shouting (e.g. “SHOUTING!!!”).

Image moderation

Image moderation, where we’ll blur images that contain either adult or violent content.

Automatic moderation, by nature, needs to be performed in a trusted environment (i.e. not on the client), so Cloud Functions for Firebase is a great, natural fit for this. Two functions will be needed to perform the two types of moderation.

Text Moderation

The text moderation will be performed by a Firebase Realtime Database triggered function named moderator. When a user adds a new comment or post to the Realtime Database, the function is triggered and uses the bad-words npm package to remove swear words. We’ll then use the capitalize-sentence npm package to fix the case of messages that contain too many uppercase letters (which typically means the user is shouting). The final step is to write the moderated message back to the Realtime Database.
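To make the case-fixing step concrete without pulling in capitalize-sentence, here is a minimal stand-in. toSentenceCase is a hypothetical helper written for illustration, not the package’s implementation; for instance, it leaves a standalone “i” lowercase.

```javascript
// Hypothetical stand-in for capitalize-sentence, for illustration only:
// upper-cases the first letter of the string and the first letter that
// follows a ".", "?" or "!" plus whitespace.
function toSentenceCase(text) {
  return text.replace(/(^\s*|[.?!]\s+)([a-z])/g,
      (match, prefix, letter) => prefix + letter.toUpperCase());
}

// Same steps as the stopShouting() helper later in this post, using the
// stand-in: lower-case everything, re-capitalize sentence starts, then
// turn each run of "!" into a single ".".
function stopShouting(message) {
  return toSentenceCase(message.toLowerCase()).replace(/!+/g, '.');
}

console.log(stopShouting('IN THIS MESSAGE I AM SHOUTING!!! REALLY LOUDLY!!!'));
// prints "In this message i am shouting. Really loudly."
```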

Data Structure

To illustrate this, we’ll use a simple data structure that represents a list of messages written by users of a chat room. Each message is an object with a text attribute, added to the /messages list:

```
/functions-project-12345
  /messages
    /key-123456
      text: "This is my first message!"
    /key-123457
      text: "IN THIS MESSAGE I AM SHOUTING!!!"
```

Once the function has run on a newly added message, we’ll add two attributes: sanitized, which is true once the message has been checked by our moderation function, and moderated, which is true if the message was found to contain offensive content and was modified. For instance, after the function runs on the two sample messages above, we should get:

```
/functions-project-12345
  /messages
    /key-123456
      text: "This is my first message!",
      sanitized: true,
      moderated: false
    /key-123457
      text: "In this message I am shouting.",
      sanitized: true,
      moderated: true
```
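Both flags can be derived from just the original text and its moderated version. Below is a minimal sketch. buildModerationUpdate is a hypothetical helper name, and the moderate argument stands in for whatever moderation function you use.

```javascript
// Hypothetical helper: given a message as stored under /messages/{key} and a
// moderation function, returns the fields to write back, or null when there
// is nothing to do (deleted message, or already sanitized).
function buildModerationUpdate(message, moderate) {
  if (!message || message.sanitized) {
    return null;
  }
  const moderatedText = moderate(message.text);
  return {
    text: moderatedText,
    sanitized: true,                          // the message has been checked
    moderated: moderatedText !== message.text // true only if the text changed
  };
}

// Toy moderation function for this example: lower-case all-caps text.
const lowerIfShouting = (text) =>
    text === text.toUpperCase() ? text.toLowerCase() : text;

const update = buildModerationUpdate({text: 'This is my first message!'}, lowerIfShouting);
console.log(update.moderated); // false, since the text was left unchanged
```

Because the comparison is against the original text, moderated stays false for clean messages even though every processed message gets sanitized: true.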

Cloud Function

Our moderator function will be triggered every time there is a write to one of the messages. We set this up using the functions.database.ref('/messages/{messageId}').onWrite(...) trigger. We’ll moderate the message and write the moderated message back to the Realtime Database:

```js
// This code targets the pre-1.0 firebase-functions API (handlers receive an event).
const functions = require('firebase-functions');

exports.moderator = functions.database.ref('/messages/{messageId}')
    .onWrite(event => {
      const message = event.data.val();

      // Skip deletions and messages that were already sanitized, including the
      // update written back below, which triggers this function again.
      if (message && !message.sanitized) {
        // Retrieved the message values.
        console.log('Retrieved message content: ', message);

        // Run moderation checks on the message and moderate if needed.
        const moderatedMessage = moderateMessage(message.text);

        // Update the Firebase DB with the checked message.
        console.log('Message has been moderated. Saving to DB: ', moderatedMessage);
        return event.data.adminRef.update({
          text: moderatedMessage,
          sanitized: true,
          moderated: message.text !== moderatedMessage
        });
      }
      return null;
    });
```
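Because the function writes back to the same /messages/{messageId} path it listens on, its own update fires onWrite again. The !message.sanitized check is what ends that cycle. The simulation below counts how many times the handler runs. It uses a hypothetical in-memory store in place of the Realtime Database and calls no Firebase APIs.

```javascript
// Hypothetical in-memory stand-in for the Realtime Database: every update()
// merges the new fields and synchronously re-fires the onWrite handler,
// mimicking how a write to /messages/{messageId} triggers the function again.
function createFakeDb(onWrite) {
  const data = {};
  let invocations = 0;
  function update(key, fields) {
    data[key] = Object.assign({}, data[key], fields);
    invocations++;
    onWrite(data[key], (next) => update(key, next));
  }
  return {data, update, invocations: () => invocations};
}

// Same guard as the moderator function; lower-casing is a toy moderation step.
function handler(message, writeBack) {
  if (message && !message.sanitized) {
    const moderated = message.text.toLowerCase();
    writeBack({text: moderated, sanitized: true, moderated: moderated !== message.text});
  }
}

const db = createFakeDb(handler);
db.update('key-123457', {text: 'IN THIS MESSAGE I AM SHOUTING!!!'});
console.log(db.invocations()); // 2: the user's write plus the function's write-back
```

Without the sanitized check, each write-back would trigger another write, and the simulation would recurse until the stack overflows.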

Checking for language

In the moderateMessage function, we’ll first check whether the user is shouting and, if so, fix the case of the sentence. Then we’ll remove all bad words using the bad-words package’s filter.

```js
const capitalizeSentence = require('capitalize-sentence');
const Filter = require('bad-words');
const badWordsFilter = new Filter();

function moderateMessage(message) {
  // Re-capitalize if the user is shouting.
  if (isShouting(message)) {
    console.log('User is shouting. Fixing sentence case...');
    message = stopShouting(message);
  }

  // Moderate if the user uses swear words.
  if (containsSwearwords(message)) {
    console.log('User is swearing. Moderating...');
    message = moderateSwearwords(message);
  }

  return message;
}

// Returns true if the string contains swear words.
function containsSwearwords(message) {
  return message !== badWordsFilter.clean(message);
}

// Hides all swear words, e.g. Crap => ****.
function moderateSwearwords(message) {
  return badWordsFilter.clean(message);
}

// Detects whether the message is shouting, i.e. more than half of its
// characters are uppercase letters or it has three or more exclamation points.
function isShouting(message) {
  return message.replace(/[^A-Z]/g, '').length > message.length / 2 ||
      message.replace(/[^!]/g, '').length >= 3;
}

// Correctly capitalizes the string as a sentence (e.g. uppercase after
// periods) and replaces each run of exclamation points with a period.
function stopShouting(message) {
  return capitalizeSentence(message.toLowerCase()).replace(/!+/g, '.');
}
```
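The isShouting() heuristic is easy to test on its own. The snippet below copies it and runs it on a few made-up sample strings. Note that very short all-caps messages such as “OK” count as shouting, which may or may not be what you want.

```javascript
// Copied from the post: a message is "shouting" if more than half of its
// characters are uppercase A-Z letters, or if it has three or more "!".
function isShouting(message) {
  return message.replace(/[^A-Z]/g, '').length > message.length / 2 ||
      message.replace(/[^!]/g, '').length >= 3;
}

const samples = [
  'This is my first message!',        // 1 of 25 chars uppercase, one "!" -> false
  'IN THIS MESSAGE I AM SHOUTING!!!', // 24 of 32 chars uppercase        -> true
  'I love NASA',                      // 5 of 11 chars uppercase         -> false
  'OK',                               // 2 of 2 chars uppercase          -> true
  'wow!!!',                           // three "!"                       -> true
];
for (const s of samples) {
  console.log(JSON.stringify(s), isShouting(s));
}
```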

Note: the bad-words package uses the swear word list from the badwords-list package, which contains only around 400 entries. Users can be endlessly inventive, so this list is far from exhaustive, and you might want to extend the bad words dictionary.
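The following minimal, dependency-free sketch of list-based masking shows why a fixed dictionary is easy to bypass and what extending it looks like. The createWordFilter helper, its extend method and the word lists are hypothetical. This is not the bad-words package’s API, so check that package’s documentation for how to add words to its filter.

```javascript
// Hypothetical word filter for illustration only. It masks whole words
// (case-insensitive) that appear in its list, replacing each character with "*".
function createWordFilter(words) {
  const list = new Set(words.map((w) => w.toLowerCase()));
  return {
    extend(...extra) {
      extra.forEach((w) => list.add(w.toLowerCase()));
    },
    clean(text) {
      return text.replace(/[A-Za-z0-9]+/g,
          (word) => (list.has(word.toLowerCase()) ? '*'.repeat(word.length) : word));
    },
  };
}

const filter = createWordFilter(['crap']);
console.log(filter.clean('What a load of Crap')); // "What a load of ****"
console.log(filter.clean('What a load of cr4p')); // "What a load of cr4p": not in the list
filter.extend('cr4p');
console.log(filter.clean('What a load of cr4p')); // "What a load of ****"
```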

Image Moderation

To moderate images, we’ll set up a blurOffensiveImages function that is triggered every time a file is uploaded to Cloud Storage. We set this up using the functions.storage.object().onChange(...) trigger. We’ll then use the Google Cloud Vision API to check whether the image contains violent or adult content.

Using the Cloud Vision API

The Cloud Vision API has a feature, SafeSearch detection, that specifically lets you detect inappropriate content in images. If the image is inappropriate, we’ll blur it:

```js
const functions = require('firebase-functions');
// Factory-style client initialization, as used by the older client-library
// versions this code targets.
const gcs = require('@google-cloud/storage')();
const vision = require('@google-cloud/vision')();

exports.blurOffensiveImages = functions.storage.object().onChange(event => {
  const object = event.data;
  const file = gcs.bucket(object.bucket).file(object.name);

  // Exit if this is a move or deletion event.
  if (object.resourceState === 'not_exists') {
    return console.log('This is a deletion event.');
  }

  // Check the image content using the Cloud Vision API.
  return vision.detectSafeSearch(file).then(data => {
    const safeSearch = data[0];
    console.log('SafeSearch results on image', safeSearch);

    if (safeSearch.adult || safeSearch.violence) {
      return blurImage(object.name, object.bucket, object.metadata);
    }
  });
});
```
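The if test above treats safeSearch.adult and safeSearch.violence as booleans, which assumes the client library version used in this post. The Cloud Vision API itself reports each SafeSearch category as a likelihood: UNKNOWN, VERY_UNLIKELY, UNLIKELY, POSSIBLE, LIKELY or VERY_LIKELY. If your client returns those strings, a threshold check like the sketch below could replace the boolean test. The isOffensive name and the LIKELY default threshold are assumptions, not something the API prescribes.

```javascript
// Likelihood values used by Cloud Vision SafeSearch, from least to most likely.
// UNKNOWN sits below every other value, so it never passes a real threshold.
const LIKELIHOODS = ['UNKNOWN', 'VERY_UNLIKELY', 'UNLIKELY', 'POSSIBLE', 'LIKELY', 'VERY_LIKELY'];

// Hypothetical helper: true if the adult or violence likelihood is at or
// above the threshold. Missing categories are treated as not offensive.
function isOffensive(safeSearch, threshold = 'LIKELY') {
  const min = LIKELIHOODS.indexOf(threshold);
  return ['adult', 'violence'].some(
      (category) => LIKELIHOODS.indexOf(safeSearch[category]) >= min);
}

console.log(isOffensive({adult: 'VERY_UNLIKELY', violence: 'POSSIBLE'}));        // false
console.log(isOffensive({adult: 'VERY_LIKELY', violence: 'UNLIKELY'}));          // true
console.log(isOffensive({adult: 'UNLIKELY', violence: 'POSSIBLE'}, 'POSSIBLE')); // true
```

A lower threshold blurs more borderline images. A higher one lets more of them through unblurred.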

Blurring images in Cloud Storage

To blur the image stored in Cloud Storage, we’ll first download it to the Cloud Functions instance, blur it with ImageMagick (which is installed by default on all instances), then re-upload it to Cloud Storage:

```js
const mkdirp = require('mkdirp-promise');
const exec = require('child-process-promise').exec;
const LOCAL_TMP_FOLDER = '/tmp/';

// Uses the same Cloud Storage client (gcs) as blurOffensiveImages.
function blurImage(filePath, bucketName, metadata) {
  const filePathSplit = filePath.split('/');
  filePathSplit.pop();
  const fileDir = filePathSplit.join('/');
  const tempLocalDir = `${LOCAL_TMP_FOLDER}${fileDir}`;
  const tempLocalFile = `${LOCAL_TMP_FOLDER}${filePath}`;
  const bucket = gcs.bucket(bucketName);

  // Create the temp directory where the storage file will be downloaded.
  return mkdirp(tempLocalDir).then(() => {
    console.log('Temporary directory has been created', tempLocalDir);
    // Download file from bucket.
    return bucket.file(filePath).download({destination: tempLocalFile});
  }).then(() => {
    console.log('The file has been downloaded to', tempLocalFile);
    // Blur the image in place using ImageMagick (paths quoted to allow spaces).
    return exec(`convert "${tempLocalFile}" -channel RGBA -blur 0x8 "${tempLocalFile}"`);
  }).then(() => {
    console.log('Blurred image created at', tempLocalFile);
    // Upload the blurred image over the original.
    return bucket.upload(tempLocalFile, {
      destination: filePath,
      metadata: {metadata: metadata} // Keep the custom metadata.
    });
  }).then(() => {
    console.log('Blurred image uploaded to Storage at', filePath);
  });
}
```
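blurImage() builds its temporary paths by splitting on “/” and passes them to convert in a shell command string, so unusual file names (containing quotes, $ and similar characters) need care. The sketch below shows an alternative, assuming the same '/tmp/' scratch folder. Node’s path.posix computes the paths, and the ImageMagick arguments are kept in an array suitable for child_process.execFile, which does not go through a shell. blurPlan is a hypothetical name, and the snippet only builds the plan without running convert.

```javascript
const path = require('path');

// Hypothetical helper: computes where blurImage() would download the file and
// the argument list for ImageMagick's convert, without touching the disk or
// spawning a process. tmpRoot defaults to '/tmp/' (an assumption).
function blurPlan(filePath, tmpRoot = '/tmp/') {
  const tempLocalFile = path.posix.join(tmpRoot, filePath);
  const tempLocalDir = path.posix.dirname(tempLocalFile);
  // Same blur as the command above, overwriting the local file in place.
  const convertArgs = [tempLocalFile, '-channel', 'RGBA', '-blur', '0x8', tempLocalFile];
  return {tempLocalDir, tempLocalFile, convertArgs};
}

const plan = blurPlan('user uploads/cat photo.jpg');
console.log(plan.tempLocalDir);  // /tmp/user uploads
console.log(plan.tempLocalFile); // /tmp/user uploads/cat photo.jpg
// With ImageMagick installed, the blur could then run without a shell:
// require('child_process').execFile('convert', plan.convertArgs, callback);
```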

Check out our OSS examples

Cloud Functions for Firebase can be a great tool to reactively and automatically apply moderation rules. Feel free to have a look at our open source samples for text moderation and image moderation.