Building interactive experiences with generative AI is now easier than ever with the new extensions: Build Chatbot with the Gemini API and Multimodal Tasks with the Gemini API

Generative AI enables exciting new use cases for your apps. Firebase Extensions make this even easier by providing pre-packaged solutions that allow you to add new features to your apps with just a few steps. Now you can easily use the Gemini API to access Google’s most capable and general model yet to add functionality to your apps.

In this post, we’ll show you how to build a chat utility to help users find their favorite conference sessions, and a tool to automatically generate accessible alt text for an image.

Use Generative AI Without Being a Generative AI Expert

Firebase Extensions let you add new features to your apps with only a few lines of code. Both the Build Chatbot with the Gemini API and the Multimodal Tasks with the Gemini API extensions let you implement AI use cases just by writing documents to Firestore.

You don’t need to be an AI expert; generative models like Gemini make developing generative AI applications more approachable than it ever was with traditional natural language processing (NLP) models. You just need a prompt and perhaps a few examples of what the output should look like.

Create a Conference Assistant

Imagine you’re a conference organizer and want to create a website with speaker and session information. Typically, conference websites have a page that shows the agenda of the conference, and a list of all the speakers and their talks. Users can then click through each session to see which talks interest them based on the titles, and read the bios of each speaker to get information on their background. This way of presenting the content of the conference makes it hard for attendees to figure out which talks are really interesting for them, and which speakers they might want to connect with.

A better way for attendees to find interesting talks and speakers might be to provide a personalized conference assistant that suggests talks based on the attendees’ interests.

So instead of having to scroll through a list of dozens and dozens of conference talks, they could just ask “I am a mobile developer who is hoping to learn something about backend development. Which sessions would you recommend to get a good introduction to this?”

To give you an idea of how this would work, we’ve created a demo of such an assistant. The conference assistant is based on the agenda of F3, a conference about Flutter and Firebase that took place in Prague. An easy way to make sure the model responds based on a specific set of data is to include the relevant data in the prompt. In our case, we downloaded the list of talks from https://f3.events and formatted it as a CSV. Then, we wrote a prompt that gives the model enough guidance to answer questions based only on the data we provided in the context.

Answer questions about this conference only. In your response, cite a conference talk, who is giving the talk, and some information about the talk. Answer questions based on the following CSV:

Speakers,Title,Description
"Nathan Yim, Miguel Ramos",Customer Advisory Council,
"Francis Ma, Michael Thomsen",Flutter & Firebase Keynote,Hear from the product leads how the Firebase and Flutter developer communities
{REMAINDER OMITTED FOR BREVITY}...

Prompt engineering can sometimes feel like an art form. While this prompt may work well for our specific use case, it may not work for yours. Consider following the Design chat prompts guide for more information on writing a well-crafted prompt to retrieve information.
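As a rough sketch of how a prompt like the one above could be assembled from structured agenda data, the following Python snippet uses the standard `csv` module so that speaker lists containing commas are quoted correctly. The `build_prompt` function and the shape of the `talks` records are assumptions made for illustration; the post does not say how the CSV was actually produced.

```python
import csv
import io

# Instruction text taken from the prompt shown above.
INSTRUCTIONS = (
    "Answer questions about this conference only. "
    "In your response, cite a conference talk, who is giving the talk, "
    "and some information about the talk. "
    "Answer questions based on the following CSV:"
)


def build_prompt(talks):
    """Return the instructions followed by the talks rendered as CSV.

    `talks` is a hypothetical list of dicts with the keys
    "speakers" (list of str), "title" (str) and "description" (str).
    """
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow(["Speakers", "Title", "Description"])
    for talk in talks:
        # Joining speakers with ", " puts a comma inside the field,
        # so csv.writer wraps that field in double quotes.
        writer.writerow(
            [", ".join(talk["speakers"]), talk["title"], talk["description"]]
        )
    return f"{INSTRUCTIONS}\n{buffer.getvalue()}"
```

Because the whole agenda travels inside the prompt, this approach is best suited to data sets small enough to fit in the model's context window.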

In the next step, we installed the Build Chatbot with the Gemini API Firebase Extension and configured it to use our prompt. The client can communicate with the extension by creating a new Firestore document that includes the user’s question, e.g. “I would like to learn more about backend development. Which talks should I attend?”
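To make the "write a document" step concrete, here is a minimal Python sketch of the payload a client might write. The field name `prompt` and the collection path are assumptions: both are chosen when the extension is installed, so check your own configuration. The `add_document` parameter is a hypothetical stand-in for whatever Firestore client call your app uses to add a document to a collection.

```python
from typing import Any, Callable, Dict

# Assumed values; both are configured when the extension is installed.
COLLECTION_PATH = "discussions/demo/messages"  # hypothetical path
PROMPT_FIELD = "prompt"  # assumed name of the field the extension reads


def build_message(question: str) -> Dict[str, Any]:
    """Build the Firestore document that carries the user's question."""
    text = question.strip()
    if not text:
        raise ValueError("question must not be empty")
    return {PROMPT_FIELD: text}


def send_question(
    add_document: Callable[[Dict[str, Any]], Any], question: str
) -> Any:
    """Write the question using the supplied document writer.

    With the google-cloud-firestore client, `add_document` could be
    `firestore.Client().collection(COLLECTION_PATH).add`.
    """
    return add_document(build_message(question))
```

Passing the writer in as a parameter keeps the sketch independent of any particular SDK; a web or mobile client would make the equivalent call through its own Firestore library.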

Here is the response:

I would like to learn more about backend development. Which talks should I attend?

Output:

As you would expect from a chatbot, the Build Chatbot with the Gemini API extension retains context between messages, so the user can ask follow-up questions. To pass the user’s question to the chatbot, all we need to do is create a new document in Firestore:

If I could only choose one of those talks, which one should I see?

Output:

With just a few lines of prompt based on the agenda of the conference, we were able to create an engaging experience for attendees of the conference, helping them to find suitable sessions more easily (and in less time) than before.

Auto-generate alt text for images

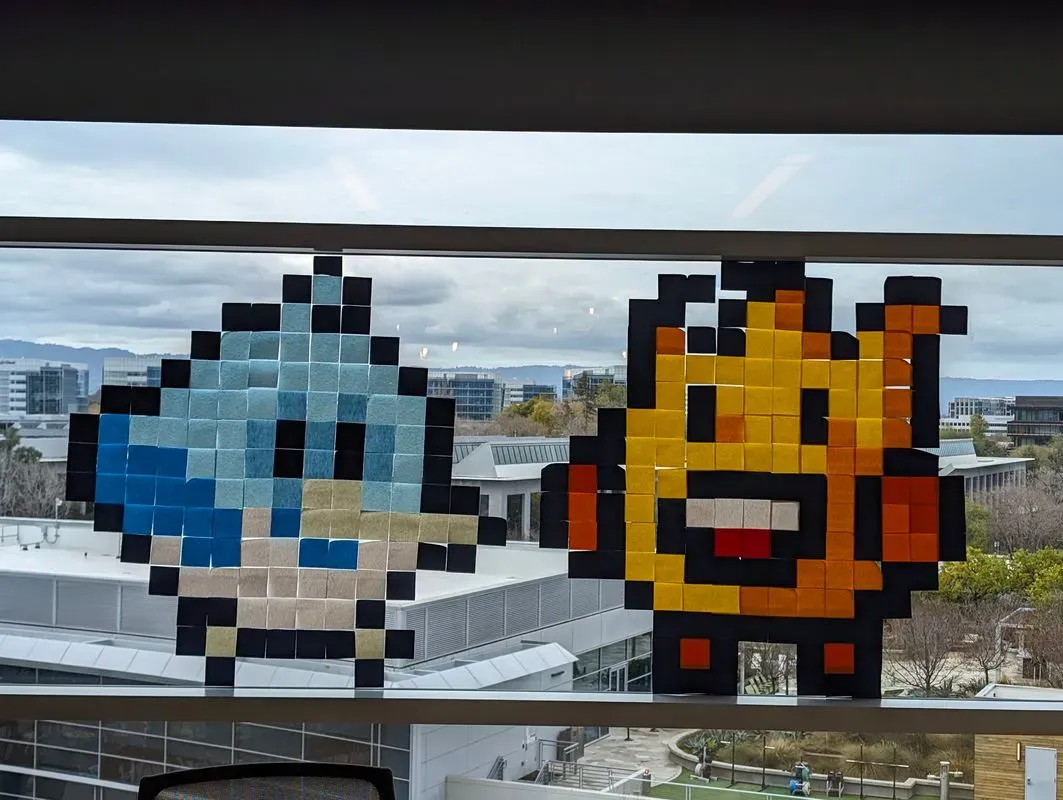

Attendees are enjoying the conference thanks to the great recommendations the conference assistant gave them. They take lots of pictures of the venue and of the talks. Let’s assume that, as part of the conference website, you also implemented an image gallery for attendees to upload their photos.

Wouldn’t it be great if you could generate alt text for all the images that people uploaded?

With the Multimodal Tasks with the Gemini API Firebase Extension, it’s easy to implement this. Gemini is a multimodal model that is capable of reasoning about text and images at the same time.

As an example, we built a demo of an alt text generator that follows the official W3C guidance on alt text (w3.org).

Here is a snippet of the prompt:

Write alt tags for img views in a web browser based on the following guidance:

Choosing appropriate text alternatives:

Imagine that you’re reading the web page aloud over the phone to someone who needs to understand the page. This should help you decide what (if any) information or function the images have. If they appear to have no informative value and aren’t links or buttons, it’s probably safe to treat them as decorative.

{OMITTED THE REMAINDER FOR BREVITY}...

Whenever a user uploads a new photo, we first store it in a Cloud Storage bucket. Then, we send the image data along with the prompt to the Gemini API, which generates the alt text for us. We then store the alt text and the storage reference in a Firestore document. The conference image gallery then uses this information to display all photos, including the generated alt text.
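The last two steps of that flow, storing the alt text next to the storage reference and using both to render the gallery, can be sketched in Python as follows. The field names `image` and `altText` and all three helper functions are hypothetical; the names your extension instance reads and writes depend on how it was configured. The sketch also assumes the gallery has a browser-loadable URL for each image, since a `gs://` reference cannot be used directly in an `img` tag.

```python
from html import escape


def storage_uri(bucket: str, object_path: str) -> str:
    """Return the gs:// reference for an object in a Cloud Storage bucket."""
    return f"gs://{bucket}/{object_path.lstrip('/')}"


def build_gallery_doc(bucket: str, object_path: str, alt_text: str) -> dict:
    """Build the document that pairs an image reference with its alt text.

    The field names "image" and "altText" are assumptions for illustration.
    """
    return {
        "image": storage_uri(bucket, object_path),
        "altText": alt_text.strip(),
    }


def render_img_tag(src_url: str, doc: dict) -> str:
    """Render an <img> tag, escaping the model-generated alt text.

    `src_url` is assumed to be an HTTPS URL the browser can load.
    """
    src = escape(src_url, quote=True)
    alt = escape(doc["altText"], quote=True)
    return f'<img src="{src}" alt="{alt}">'
```

Escaping the alt text matters here because it is model output: a stray quote or angle bracket would otherwise break the surrounding markup.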

Here is the alt text the model generated for the above image of Dash and Sparky:

gs://myproject.appspot.com/myfolder/dashAndSparky.png

Output:

Safety and security built in

All of the official Firebase Extensions are built with safety and security in mind. For the use cases we discussed in this blog post in particular, we benefit from the following advantages of using Firebase:

- Google Cloud Secret Manager is used to store our API key in a central and secure location. This avoids having to include the API key in any client-side code, which could risk accidentally leaking secrets when checking in code to our source code repository.

- Firestore Security Rules allow us to limit which users have access to the data we store in Firestore (and other Firebase services). Users only have access to their own data.

- Firebase App Check helps us to ensure only trusted clients can access our backend, thereby reducing attack vectors for our application.

What’s next?

A great way to experiment with prompts for your particular use case is Google AI Studio. It provides an interactive experience that makes it easy to iterate on an idea and refine it until it meets your needs.

Once you have crafted a prompt that delivers the results you’re looking for, head over to Extensions.dev to bring those AI experiments into your apps. You’ll be surprised how fast and easy it is to add AI use cases to your application. For more inspiration on building AI experiences into your applications using Firebase Extensions, check out the following video, in which Khanh and Nohe build an AI-powered application that can auto-generate descriptions for videos.