Your AI is smart, but is it current? Large language models (LLMs) are incredibly powerful, but their knowledge is fundamentally frozen in time, limited to the data they were trained on. So if you’re building mobile and web apps with features that rely on current events, like today’s market trends or a new product release, the model’s inability to access real-time data becomes a major roadblock.

Today, that changes. We are thrilled to announce support for Grounding with Google Search in the Firebase AI Logic client-side SDKs, the #1 most requested feature on the Firebase AI Libraries UserVoice. You can now connect the Gemini model to real-time web content from your mobile and web apps.

⚠️ The problem with static knowledge

Think of a standard LLM as a brilliant encyclopedia—vastly knowledgeable, but printed last year. It can’t help with questions about anything that has happened since. This “knowledge cutoff” has been a major barrier for developers looking to build truly dynamic and reliable AI applications. For tasks that depend on real-time information, from customer support to financial analysis, relying on outdated data is simply not an option.

💡 The solution: connecting your AI to the now

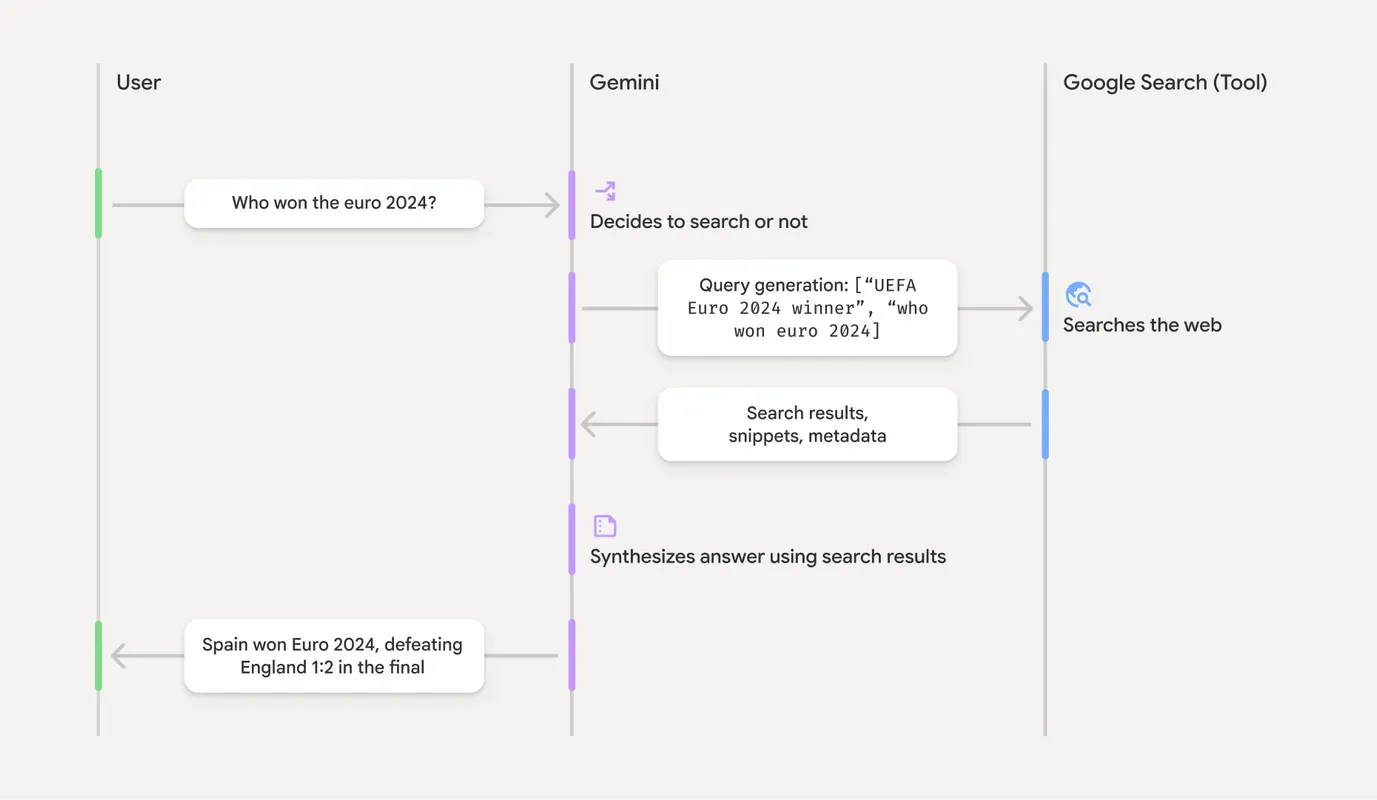

Grounding with Google Search solves this problem by allowing Gemini to perform a real-time search before it generates an answer. This process ensures the model’s response is “grounded” in the most current and relevant facts available on the web. It combines the reasoning capabilities of the LLM with the factual, up-to-the-second data of Google Search.

This means you can now build applications that:

- Provide hyper-current customer support by accessing the latest product documentation or service status updates.

- Generate timely content that incorporates breaking news, recent sports scores, or emerging trends.

- Create dynamic travel and event planners that can react to last-minute changes or cancellations.

🚀 Get started today

To get started, make sure you upgrade to the latest version of the Firebase AI Logic client-side SDKs (Grounding with Google Search was introduced in the following Firebase SDK versions: Android BoM 34.0.0, Flutter 2.3.0, iOS 12.0.0, Web 12.0.0, Unity 12.11.0).

Pass googleSearch as a tool to the generative model:

    import { getGenerativeModel } from "firebase/ai";

    // `ai` is assumed to be an initialized Firebase AI Logic instance (for example, from getAI)
    const model = getGenerativeModel(ai, {
      model: "gemini-2.5-flash",
      // Enable Grounding with Google Search
      tools: [{ googleSearch: {} }],
    });

    const result = await model.generateContent("Who won the euro 2024?");

Now whenever the model decides to search the web, you’ll get a groundingMetadata object in the response:

    {
      "candidates": [
        {
          "content": {
            "parts": [
              {
                "text": "Spain won Euro 2024, defeating England 2-1 in the final. This victory marks Spain's record fourth European Championship title."
              }
            ],
            "role": "model"
          },
          "groundingMetadata": {
            "webSearchQueries": [
              "UEFA Euro 2024 winner",
              "who won euro 2024"
            ],
            "searchEntryPoint": {
              "renderedContent": "<!-- HTML and CSS for the search widget -->"
            },
            "groundingChunks": [
              {"web": {"uri": "https://vertexaisearch.cloud.google.com.....", "title": "aljazeera.com"}},
              {"web": {"uri": "https://vertexaisearch.cloud.google.com.....", "title": "uefa.com"}}
            ],
            "groundingSupports": [
              {
                "segment": {"startIndex": 0, "endIndex": 85, "text": "Spain won Euro 2024, defeatin..."},
                "groundingChunkIndices": [0]
              },
              {
                "segment": {"startIndex": 86, "endIndex": 210, "text": "This victory marks Spain's..."},
                "groundingChunkIndices": [0, 1]
              }
            ]
          }
        }
      ]
    }

To comply with the Grounding with Google Search usage requirements, you should display the Google Search suggestions in your app’s UI:

    function GroundedResult({ result }) {
      // Get the model's text response and the HTML for the search
      const responseText = result.response.text();
      const renderedSearchSuggestions =
        result.response.candidates?.[0]?.groundingMetadata?.searchEntryPoint
          ?.renderedContent;

      // Display the result only if search suggestions are available
      if (renderedSearchSuggestions) {
        return (
          <div>
            <p>{responseText}</p>
            <div dangerouslySetInnerHTML={{ __html: renderedSearchSuggestions }} />
          </div>
        );
      } else {
        return (
          <div>
            <p>Result was not grounded in search</p>
          </div>
        );
      }
    }

To learn more, check out the Firebase AI Logic documentation and the pricing pages (Gemini Developer API | Vertex AI Gemini API).
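Beyond the search suggestions, the groundingSupports and groundingChunks fields in groundingMetadata can be used to show readers which sources back each part of the answer. The following is a minimal sketch of one way to do that, not an official SDK helper: `addCitations` and `listSources` are hypothetical names, the sample text and `example.com` URIs are placeholder data shaped like the response shown earlier, and the code assumes `segment.endIndex` can be used directly as a JavaScript string offset, which holds for plain ASCII text but may not for text containing multi-byte characters.

```javascript
// Sketch: insert numbered source markers into the model's text, using
// groundingSupports (which text segments are backed by which chunks)
// and groundingChunks (the web sources themselves).
// Assumption: segment.endIndex is treated as a JavaScript string offset.
function addCitations(text, groundingMetadata) {
  const supports = groundingMetadata?.groundingSupports ?? [];
  const chunks = groundingMetadata?.groundingChunks ?? [];

  // Work from the end of the text backwards so earlier offsets stay valid.
  const ordered = [...supports].sort(
    (a, b) => (b.segment?.endIndex ?? 0) - (a.segment?.endIndex ?? 0)
  );

  let cited = text;
  for (const support of ordered) {
    const end = support.segment?.endIndex;
    const indices = support.groundingChunkIndices ?? [];
    if (end === undefined || indices.length === 0) continue;

    // Keep only indices that point at a chunk with a web source.
    const markers = indices
      .filter((i) => chunks[i]?.web)
      .map((i) => `[${i + 1}]`)
      .join("");
    cited = cited.slice(0, end) + markers + cited.slice(end);
  }
  return cited;
}

// Build a numbered source list that matches the markers above.
function listSources(groundingMetadata) {
  return (groundingMetadata?.groundingChunks ?? []).map((chunk, i) => ({
    label: `[${i + 1}]`,
    title: chunk.web?.title ?? "",
    uri: chunk.web?.uri ?? "",
  }));
}

// Hypothetical sample data; the URIs are placeholders, not real sources.
const sampleText = "Spain won Euro 2024. It was their fourth title.";
const sampleMetadata = {
  groundingChunks: [
    { web: { uri: "https://example.com/source-a", title: "aljazeera.com" } },
    { web: { uri: "https://example.com/source-b", title: "uefa.com" } },
  ],
  groundingSupports: [
    { segment: { startIndex: 0, endIndex: 20 }, groundingChunkIndices: [0] },
    { segment: { startIndex: 21, endIndex: 47 }, groundingChunkIndices: [0, 1] },
  ],
};

console.log(addCitations(sampleText, sampleMetadata));
// → Spain won Euro 2024.[1] It was their fourth title.[1][2]
console.log(listSources(sampleMetadata));
```

In an app, you would pass `result.response.text()` and `result.response.candidates?.[0]?.groundingMetadata` to these helpers. Both return their input unchanged or an empty list when the response was not grounded.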

We can’t wait to see what you build!