Over the past few months, we’ve been working on bringing more power and flexibility to AI-powered applications. Our goal is to provide the best tools for building amazing, full-stack experiences - and thanks to your feedback, we’ve just rolled out some exciting new features.

Let’s dive in and see what’s new!

Unique AI experiences for your users

The AI landscape has so many features that can give your users amazing and unique experiences. And we’ve launched several new capabilities that make it easier than ever to build them directly into your apps using Firebase AI Logic.

Build natural, human-like interactions

With the Gemini Live API, you can provide your users with the experience of natural, human-like voice conversations, with the ability to interrupt the model’s responses using text or voice commands. And now, we’ve expanded our integration to include a no-cost option through the Gemini Developer API. It’s now supported on our Web, Flutter, Unity, and Android SDKs. iOS support is coming soon.

    import {
      getAI,
      getLiveGenerativeModel,
      GoogleAIBackend,
      ResponseModality,
      startAudioConversation,
    } from "firebase/ai";

    // Initialize the Gemini Developer API backend service
    // (firebaseApp is your already-initialized Firebase app)
    const ai = getAI(firebaseApp, { backend: new GoogleAIBackend() });

    // Create a LiveGenerativeModel instance with the flash-live model
    const model = getLiveGenerativeModel(ai, {
      model: "gemini-live-2.5-flash-preview",
      // Configure the model to respond with audio
      generationConfig: {
        responseModalities: [ResponseModality.AUDIO],
      },
    });

    const session = await model.connect();

    // Start the audio conversation
    const audioConversationController = await startAudioConversation(session);

    // ... Later, to stop the audio conversation
    await audioConversationController.stop();

Edit and generate images

For specialized tasks where image control is critical, you can now edit and generate images client-side using Imagen models and Firebase AI Logic SDKs (for Android and Flutter). With Imagen’s auto-detection capabilities, all you need to provide is an original or reference image along with a text prompt describing what you want changed. Imagen does the rest: inserting or removing objects, changing aspect ratios, replacing backgrounds, and even generating new images based on a reference image.

    val model = Firebase.ai.imagenModel(
        model = "imagen-3.0-capability-001"
    )

    // Replace existing parts of images.
    val inpaintedImage = model.inpaintImage(
        sourceImage,
        "outer space, with multiple constellations visible",
        ImagenBackgroundMask(),
        ImagenEditingConfig(ImagenEditMode.INPAINT_INSERTION)
    )

    // Add new content to the borders of existing images.
    val outpaintedImage = model.outpaintImage(
        sourceImage,
        Dimensions(1024, 1024)
    )

    // Do a style transfer (and more!) using the full editing API.
    // The "[1]" in the prompt refers to the style reference with ID 1.
    val styledImage = model.editImage(
        listOf(ImagenStyleReference(styleImage, 1, "cartoon style")),
        "A cat flying through outer space, in a cartoon style[1]",
        ImagenEditingConfig(editSteps = 50)
    )

And if you’re looking for a more conversational experience for editing and generating images, try out Nano Banana with Firebase AI Logic SDKs!

Offer no-cost and offline capabilities by running models locally

In a joint effort with the Chrome team, we provide hybrid on-device inference for web apps. Accessing the hybrid on-device experience is now even easier since we’ve integrated it directly into our main Firebase JS SDK. The library automatically checks whether Gemini Nano is available in the user’s browser and, based on the preferences you set, switches between running the prompt on the device and on cloud-hosted models.
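To make the switching behavior concrete, here is a minimal sketch of the kind of decision involved. It is a simplified model for illustration, not the SDK’s actual implementation: the `Mode` values, the `chooseBackend` function, and the `onDeviceAvailable` flag are all hypothetical names.

```javascript
// Illustrative only: a simplified model of hybrid routing, not the SDK's internals.
// The mode names and the onDeviceAvailable flag are assumptions for this sketch.
const Mode = Object.freeze({
  PREFER_ON_DEVICE: "prefer_on_device",
  ONLY_ON_DEVICE: "only_on_device",
  ONLY_IN_CLOUD: "only_in_cloud",
});

// Decide where a prompt should run, given the developer's preference
// and whether an on-device model was detected in the browser.
function chooseBackend(mode, onDeviceAvailable) {
  switch (mode) {
    case Mode.ONLY_IN_CLOUD:
      return "cloud";
    case Mode.ONLY_ON_DEVICE:
      if (!onDeviceAvailable) {
        throw new Error("On-device model is not available in this browser");
      }
      return "on-device";
    case Mode.PREFER_ON_DEVICE:
      // Fall back to the cloud when the local model is missing.
      return onDeviceAvailable ? "on-device" : "cloud";
    default:
      throw new Error(`Unknown mode: ${mode}`);
  }
}

console.log(chooseBackend(Mode.PREFER_ON_DEVICE, false)); // prints "cloud"
```

A "prefer on-device" setting gives users the no-cost, offline path when it exists while still working in browsers without a local model.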

More control and observability of your AI features

Your users’ experience is crucial to your app’s success, but so is your developer experience. We’ve launched several new capabilities that give you more control and observability of your AI features.

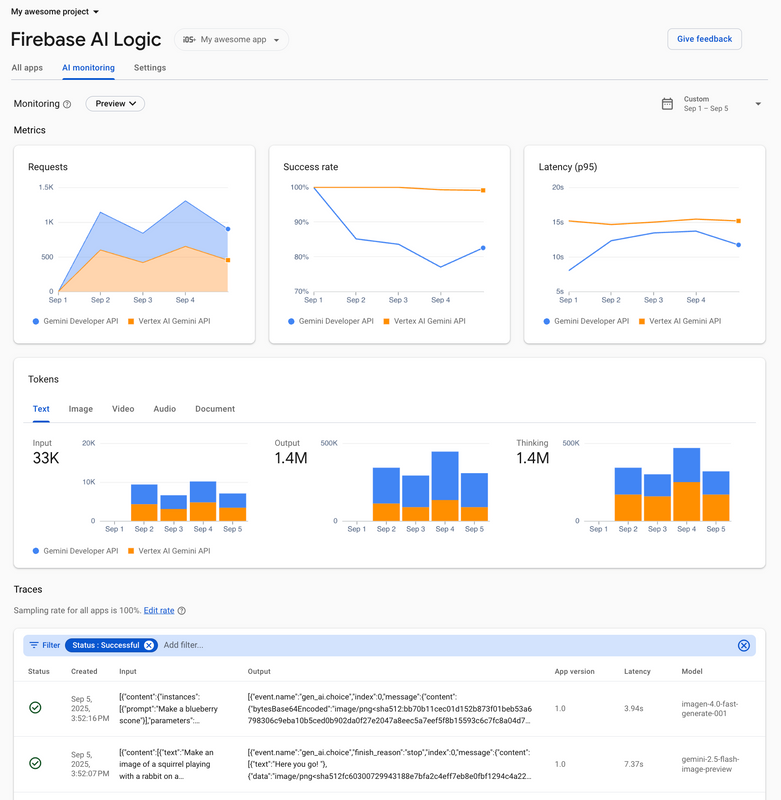

Debug and optimize using AI monitoring

Following our launch of AI monitoring for calls to the Vertex AI Gemini API, we’ve expanded support to the Gemini Developer API. This gives you free-tier access to powerful tools that help you debug and optimize your AI features. AI monitoring in the Firebase console surfaces metrics such as requests, latency, errors, and token usage, giving you comprehensive visibility into your requests to Gemini models.
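As a rough illustration of what these metrics summarize, the sketch below aggregates a few made-up request records. The record shape, field names, and `summarize` helper are hypothetical; this is not how the Firebase console computes its dashboards, and the numbers are sample data only.

```javascript
// Hypothetical request records; the field names are assumptions for this sketch.
const requests = [
  { latencyMs: 820, ok: true, inputTokens: 120, outputTokens: 310 },
  { latencyMs: 1430, ok: true, inputTokens: 95, outputTokens: 540 },
  { latencyMs: 260, ok: false, inputTokens: 80, outputTokens: 0 },
];

// Reduce a list of records to request count, error rate, mean latency, and token usage.
function summarize(records) {
  const count = records.length;
  if (count === 0) {
    return { requests: 0, errorRate: 0, meanLatencyMs: 0, totalTokens: 0 };
  }
  const errors = records.filter((r) => !r.ok).length;
  const totalLatency = records.reduce((sum, r) => sum + r.latencyMs, 0);
  const totalTokens = records.reduce(
    (sum, r) => sum + r.inputTokens + r.outputTokens,
    0
  );
  return {
    requests: count,
    errorRate: errors / count,
    meanLatencyMs: totalLatency / count,
    totalTokens,
  };
}

console.log(summarize(requests));
```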

Get the most out of thinking models

All the latest Gemini 2.5 models are “thinking” models, which significantly improves their reasoning and multi-step planning abilities. Firebase AI Logic supports several configurations and options for thinking models. For example, with thinking budgets, you can control how much the model is allowed to “think”. This is particularly important for balancing latency and cost against the ability to handle complex generations. And by examining thought summaries, you can get insight into the model’s internal reasoning process to help debug and refine your prompts.

    // Define the thinking configuration
    val generationConfig = generationConfig {
        thinkingConfig = thinkingConfig {
            thinkingBudget = 1024
            includeThoughts = true
        }
    }

    // Create the model instance with the defined config.
    val model = Firebase.ai(GenerativeBackend.googleAI())
        .generativeModel("gemini-2.5-flash", generationConfig)

    // Generate content and print the results.
    val response = model.generateContent("Calculate 10!")
    response.apply {
        usageMetadata?.let { println("Thoughts Token Count: ${it.thoughtsTokenCount}") }
        thoughtSummary?.let { println("Thought Summary: $it") }
        text?.let { println("Answer: $it") }
    }

Prepare for upcoming enhanced protection

Firebase AI Logic now supports limited-use tokens with Firebase App Check. Currently, you can configure the App Check token lifespan from 30 minutes to 7 days. Limited-use tokens shorten that lifespan to 5 minutes, giving you an extra layer of protection.
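To put those lifespans side by side, here is a small arithmetic sketch. The `isWithinLifespan` helper and the constant names are hypothetical and only model the time window. They are not App Check’s validation logic.

```javascript
const MINUTE_MS = 60 * 1000;

// Lifespans from the paragraph above, in milliseconds.
const LIMITED_USE_TTL_MS = 5 * MINUTE_MS;
const MIN_STANDARD_TTL_MS = 30 * MINUTE_MS;
const MAX_STANDARD_TTL_MS = 7 * 24 * 60 * MINUTE_MS;

// Hypothetical helper: true while a token issued at issuedAtMs is still inside its lifespan.
function isWithinLifespan(issuedAtMs, ttlMs, nowMs) {
  return nowMs >= issuedAtMs && nowMs - issuedAtMs < ttlMs;
}

const issuedAt = 0;
const tenMinutesLater = 10 * MINUTE_MS;

// A token captured ten minutes ago is already outside a 5-minute window...
console.log(isWithinLifespan(issuedAt, LIMITED_USE_TTL_MS, tenMinutesLater)); // false
// ...but still inside even the shortest standard lifespan.
console.log(isWithinLifespan(issuedAt, MIN_STANDARD_TTL_MS, tenMinutesLater)); // true

// How many times longer the maximum standard lifespan is than the limited-use one.
console.log(MAX_STANDARD_TTL_MS / LIMITED_USE_TTL_MS); // 2016
```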

We highly recommend enabling limited-use tokens now. This prepares your users for an upcoming feature that restricts usage to one-time tokens by ensuring they are already on app versions that support it when it becomes available.

    val ai = Firebase.ai(
        backend = GenerativeBackend.googleAI(),
        useLimitedUseAppCheckTokens = true
    )

Your feedback in action: incredible growth and what’s next

Looking ahead, we’re already working on the next wave of features to provide an even more differentiated developer experience. We’re focused on improving the coding experience with easier-to-use APIs, and on improving the security and privacy of your prompts with server prompt templates.

We’ve been absolutely blown away by the community’s response to Firebase AI Logic. Your adoption and feedback are what drive us to keep improving, so please don’t hesitate to reach out and let us know what you think.

We’re incredibly excited about the future of AI-powered applications, and we can’t wait to see what you build with these new tools.

Happy building!