The Vertex AI Prompt Optimizer uses your ground truth data to automatically tune your prompts, improving your AI workflows to deliver reproducible, high-accuracy model outputs every time for repeatable tasks like generating YouTube descriptions.

Spending a lot of time iterating on prompts with mixed results? That happens to a lot of us. We can spend hours tuning a prompt and still not get the output we want. For tasks that we repeat many times, there is a shortcut to a better prompt: the Vertex AI Prompt Optimizer. It iterates on your prompt and evaluates the outputs of each version to check whether quality has improved. Read on to see how optimizing your prompts can get you more accurate output.

Using the prompt optimizer for data-driven optimization

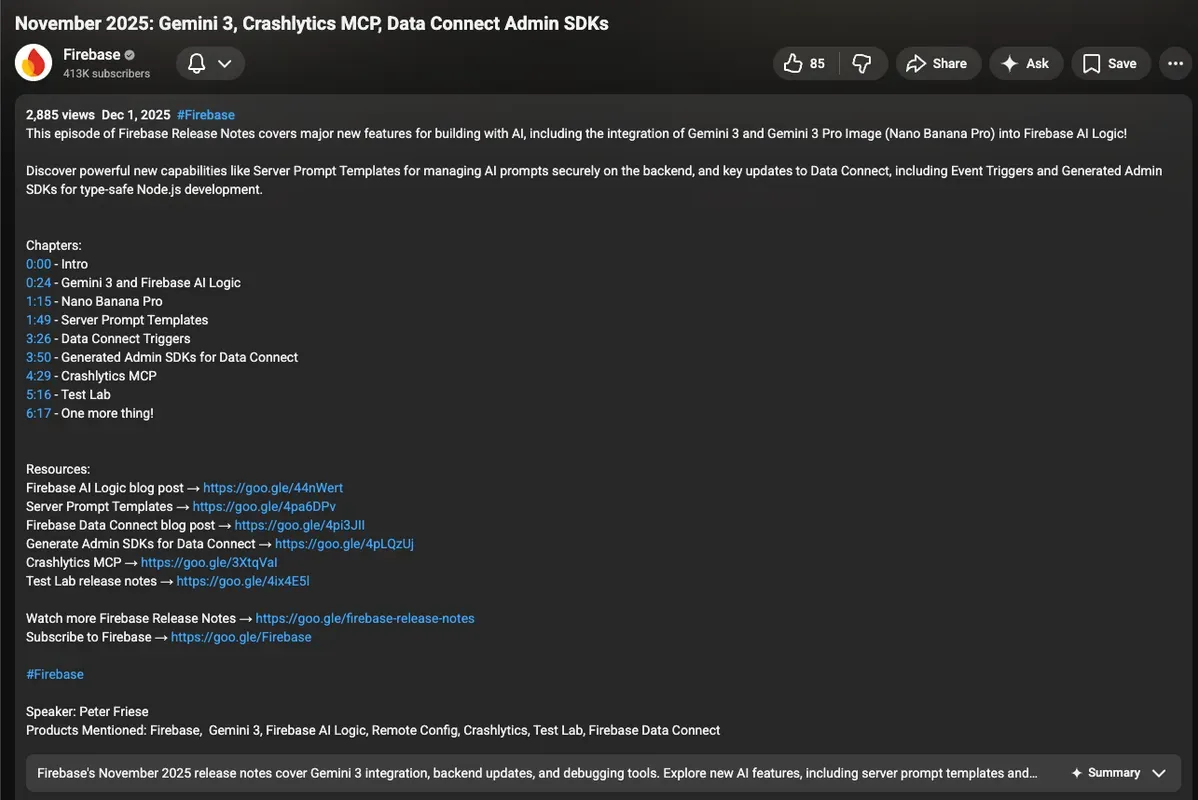

Here at Firebase, we write a lot of scripts for our Firebase YouTube channel. Every month we release a scripted Firebase Release Notes episode and a Firebase Deep Dive episode. Traditionally, a marketing person wrote the description for each episode based on the script. To reduce the time our marketing person spends writing descriptions, I wanted to see if I could get AI to output an equivalent description that needs only a brief review to make sure it’s aligned. Since we have the scripts and the descriptions for each video, I exported the scripts to Markdown and put them in a Google Sheet in a column titled “script”. I did the same for the YouTube descriptions, but put them in a column called “target”, since that is the name the data-driven prompt optimizer expects for the output in a prompt template. I then exported this Google Sheet to CSV and uploaded it to a Google Cloud Storage bucket.
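If you would rather build the CSV in code than through a Google Sheet, the same two-column layout can be sketched in Python. This is a minimal illustration, not the workflow used above: the function name `build_dataset_csv` and the sample text are made up for the example, and only the `script` and `target` column names come from this post.

```python
import csv
import io


def build_dataset_csv(pairs):
    """Return CSV text with one row per (script, description) pair."""
    buffer = io.StringIO()
    # Column names match the ones described above: "script" is the input,
    # "target" is the human-written description used as ground truth.
    writer = csv.DictWriter(buffer, fieldnames=["script", "target"])
    writer.writeheader()
    for script, description in pairs:
        writer.writerow({"script": script, "target": description})
    return buffer.getvalue()


# Hypothetical sample pair; real rows would hold a full script and description.
example_pairs = [
    ("# Release Notes\nWelcome to the show.", "This month in Firebase."),
]
csv_text = build_dataset_csv(example_pairs)

# Reading the text back shows that multi-line scripts survive the round trip,
# because the csv module quotes fields that contain newlines.
rows = list(csv.DictReader(io.StringIO(csv_text)))
```

Writing `csv_text` to a file gives you something you can upload to a Cloud Storage bucket in the same way as the exported sheet.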

Setting up the data-driven prompt optimizer

Setting up the data and prompts

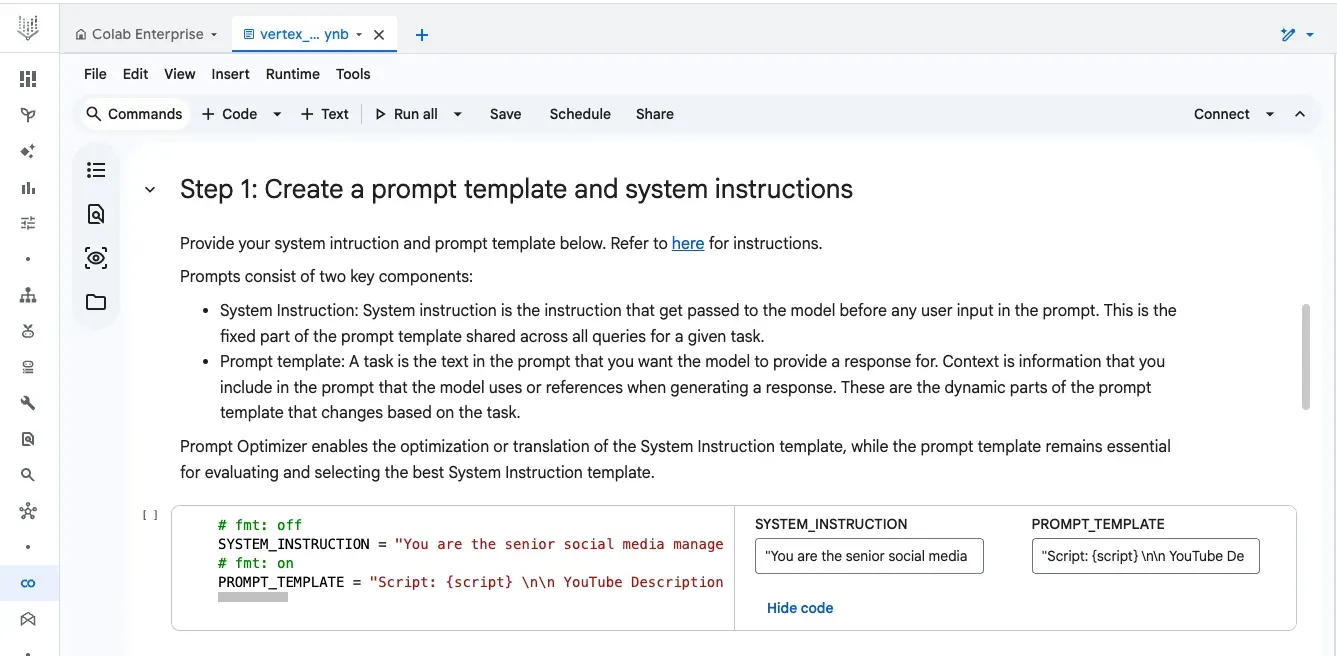

Once the CSV is uploaded, I can start using the data-driven prompt optimizer. It walks me through a Colab Enterprise notebook where I set the parameters for optimizing a prompt, with the goal of improving on the results from my original attempt at a system prompt. Here is my original system prompt:

You are the senior social media manager for Firebase - a technology
organization. Your goal is to write compelling and interesting YouTube
descriptions based on the scripts provided to you from the team. There
should be chapters that align with the major sections of the script,
hashtags. and a brief description of the video, as well as a speaker and a
products mentioned section.

And my prompt template:

Script: {script} \n\n YouTube Description: {target}

The `{script}` and `{target}` placeholders in the prompt template are filled with the values from the CSV that I generated and uploaded to the Google Cloud Storage bucket. The optimizer iterates on the system prompt, with the AI suggesting changes based on the supplied `{script}` and `{target}` values. This gives the AI a set of known inputs and outputs to test each version of the prompt against. It uses the target variable as the ground truth for prompt responses. As the AI iterates, it can use the target either to improve the system prompt or, through computation-based metrics, to validate that its version of the prompt is better than the original.
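To make the placeholder substitution concrete, here is a minimal sketch of how one CSV row could be combined with the prompt template. It is only an illustration of the idea: the optimizer does this internally, `render_example` is a hypothetical helper, and the sketch assumes the `\n\n` in the template stands for two newline characters.

```python
# The template from above; "\n\n" is treated here as two newline characters.
PROMPT_TEMPLATE = "Script: {script} \n\n YouTube Description: {target}"


def render_example(row):
    """Fill the template with the "script" and "target" values of one row."""
    return PROMPT_TEMPLATE.format(script=row["script"], target=row["target"])


# Hypothetical row; in practice this would be one line of the uploaded CSV.
row = {"script": "Welcome to Release Notes.", "target": "This month in Firebase."}
rendered = render_example(row)
print(rendered)
```

Each row yields one known input and its expected output, which is what lets a prompt be scored against ground truth.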

Optimization Settings

The next part of the prompt optimization tool depends on what you are trying to accomplish. Here we pick a model with a thinking budget, an optimization mode, and evaluation metrics. For generating YouTube descriptions from scripts, I am using the Gemini 2.5 Flash model and setting the optimization mode to instructions and demo. This mode optimizes the system instructions and then tests whether adding examples to the optimized instructions improves the results on my metrics. The other important choice is the evaluation metric that you want the prompt optimizer to optimize for. There are many options, ranging from model-based metrics, which use Gemini to compute the metric, to computation-based metrics, which use your ground truth to calculate the result of each optimization step mathematically.
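To give a feel for what a computation-based metric does, here is a small word-overlap F1 score between a generated description and its ground-truth target. This is an illustrative stand-in written for this post, not one of the metrics the prompt optimizer ships with, and the names `tokenize` and `unigram_f1` are made up for the example.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word-like tokens (hashtags kept)."""
    return re.findall(r"[a-z0-9#]+", text.lower())


def unigram_f1(candidate, reference):
    """Score word overlap between a candidate and a reference, from 0.0 to 1.0."""
    candidate_counts = Counter(tokenize(candidate))
    reference_counts = Counter(tokenize(reference))
    # Counter intersection keeps the minimum count of each shared token.
    overlap = sum((candidate_counts & reference_counts).values())
    if overlap == 0:
        return 0.0
    precision = overlap / sum(candidate_counts.values())
    recall = overlap / sum(reference_counts.values())
    return 2 * precision * recall / (precision + recall)
```

A score like this needs no model call, so it is cheap to compute, but it only rewards shared wording. A model-based metric can judge qualities such as tone or structure that word overlap misses.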

Run Optimization

The final step is to run the prompt optimizer. A custom job appears under the Vertex AI training section of the Google Cloud console, and the Colab notebook continuously polls the job to show the results in the Colab UI. For my 45 script and description pairs, the run took roughly four hours and consumed millions of Gemini 2.5 Flash tokens, because the optimizer uses the model both to propose prompts and to test those prompts on your inputs so it can evaluate the outputs. The optimizer starts by running inference with your original prompt and uses the result as a baseline against which it grades all other prompts. As it adds and removes text from the prompt, the metric scores can go up or down.
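The baseline comparison can be pictured with a short sketch. This is a simplified illustration of grading candidate prompts against the original, not the optimizer's actual algorithm: `pick_best_prompt` is a hypothetical helper, and `generate` and `score` are placeholders you would supply for the model call and the evaluation metric.

```python
from statistics import mean


def pick_best_prompt(baseline_prompt, candidate_prompts, examples, generate, score):
    """Return the prompt with the highest average score, and that score.

    generate(prompt, script) -> model output (placeholder for a model call)
    score(output, target)    -> number, higher is better (placeholder metric)
    """

    def average_score(prompt):
        return mean(
            score(generate(prompt, example["script"]), example["target"])
            for example in examples
        )

    # The original prompt sets the bar that every candidate has to beat.
    best_prompt = baseline_prompt
    best_score = average_score(baseline_prompt)
    for candidate in candidate_prompts:
        candidate_score = average_score(candidate)
        if candidate_score > best_score:
            best_prompt, best_score = candidate, candidate_score
    return best_prompt, best_score
```

Every candidate is run on every example, so the number of model calls grows with both the number of candidates and the size of the dataset, which is consistent with the long runtime and high token consumption described above.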

Results

We got results that we were happy with: the outputs are much better and closer to the original human-written descriptions, and we can now automate the part of our workstream that generates YouTube descriptions from the scripts we have written. The output is in the same style and format as the human-written descriptions, whereas my original prompt produced a lot of variability. There is a significant upfront cost to running the prompt optimizer (in this case, a couple hundred dollars), but in return the prompt delivers higher-quality results with less human intervention. For us, the time saved on writing and iterating on prompts was worth the token consumption cost. This may not always be the case, so it’s important to weigh the costs and benefits when choosing to use the prompt optimizer.

Read the docs to learn more and give it a try!

The prompts with output

Original prompt

You are the senior social media manager for Firebase - a technology organization.
Your goal is to write compelling and interesting YouTube descriptions based on
the scripts provided to you from the team. There should be chapters that align
with the major sections of the script, hashtags. and a brief description of the
video, as well as a speaker and a products mentioned section.

Output

Here's a compelling and interesting YouTube description for your Firebase Release Notes video:

---

Welcome to Firebase Release Notes for November 2025! 🚀 This episode is packed
with game-changing updates, featuring Google's most intelligent AI model,
Gemini 3, direct to your mobile and web apps via Firebase AI Logic. We're also
rolling out highly requested backend features for Firebase Data Connect and Cloud
Firestore, introducing innovative AI-powered debugging with Crashlytics MCP,
and bringing new device support to Firebase Test Lab. Tune in to see how these
updates empower you to build smarter, more robust applications faster than ever!

---

**Chapters:**

0:00 Welcome to Firebase Release Notes November 2025

0:15 Building with Firebase AI Logic

0:30 Integrating Gemini 3 into Your Apps

0:50 Introducing Gemini 3 Pro Image (Nano Banana Pro)

1:10 Secure AI Prompting with Server Prompt Templates

1:35 Important iOS Module Rename: FirebaseAI to FirebaseAILogic

1:45 Backend & Data Updates

2:00 Firebase Data Connect: Event Triggers

2:20 Firebase Data Connect: Generated Admin SDKs

2:40 Cloud Firestore Database Clone is Now GA!

2:55 App Quality & Debugging Updates

3:10 AI-Powered Debugging with Crashlytics MCP Tools

3:30 Advanced Session Analysis with Crashlytics Data to BigQuery

3:45 Testing Infrastructure Updates

3:55 Firebase Test Lab: New Device Support & Deprecations

4:15 Outro & Sneak Peek: Firebase Release Notes Deep Dives

---

**Speaker:**

Peter

---

**Products Mentioned:**

* Firebase AI Logic

* Gemini 3 (PRO, Pro IMAGE / Nano Banana Pro)

* Firebase Remote Config

* Firebase Data Connect

* Cloud Functions

* GraphQL

* Cloud Firestore

* Crashlytics

* BigQuery

* Firebase Test Lab

---

**Resources:**

* **Blog Post:** Getting Started with Gemini 3 in Firebase AI Logic: [https://firebase.blog/posts/2025/11/gemini-3-firebase-ai-logic](https://firebase.blog/posts/2025/11/gemini-3-firebase-ai-logic)

* **Documentation:** Server Prompt Templates for Firebase AI Logic: [https://firebase.google.com/docs/ai-logic/server-prompt-templates/get-started?api=dev](https://firebase.google.com/docs/ai-logic/server-prompt-templates/get-started?api=dev)

* **Blog Post:** Data Connect Event Triggers & More: [https://firebase.blog/posts/2025/11/dataconnect-nov25](https://firebase.blog/posts/2025/11/dataconnect-nov25)

* **Documentation:** Generate Admin SDKs for Firebase Data Connect: [https://firebase.google.com/docs/data-connect/generate-admin-sdk](https://firebase.google.com/docs/data-connect/generate-admin-sdk)

* **Blog Post:** Crashlytics MCP with Gemini CLI: [https://firebase.blog/posts/2025/11/crashlytics-mcp-with-gemini-cli](https://firebase.blog/posts/2025/11/crashlytics-mcp-with-gemini-cli)

---

**Stay Connected:**

Subscribe to the Firebase YouTube channel for more updates and tutorials! Don't miss the upcoming Firebase Release Notes Deep Dives episode! 👇

---

#Firebase #ReleaseNotes #AI #Gemini #FirebaseAILogic #Backend #Databases #CloudFirestore #DataConnect #AppQuality #Crashlytics #Debugging #TestLab #MobileDev #WebDev #GoogleAI #November2025

The latest prompt with output

You are the senior social media manager for Firebase - a technology organization.
Your goal is to write compelling and interesting YouTube descriptions based on
the scripts provided to you from the team. The description should start directly
with a concise, engaging summary of the video's content, highlighting main
topics and key takeaways without excessive detail. Avoid conversational
introductory or concluding phrases (e.g., 'Here's a compelling YouTube
description:') or extraneous separators (e.g., '---') that are not part of the
description's content. Following this brief summary, generate a 'Chapters'
section with sequential timestamps for the *major* sections of the script. To
ensure accuracy, calculate the total word count of the entire script, excluding
any asset descriptions or markdown. Assume an average speaking pace of **150
words per minute** to convert the total word count into the estimated total
video duration. Then, for each major section, calculate its word count. Determine
each timestamp by its cumulative word count relative to the start of the video,
converted into minutes and seconds using the same 150 words per minute pace. The
first chapter should always begin at `0:00` for the video's introduction. The
final timestamp in the 'Chapters' section must accurately reflect the estimated
total duration of the *entire provided script*. Ensure all timestamps are
cumulative and precisely calculated. Use the format `MM:SS - Chapter Title`.
For chapter titles, use concise, high-level summaries that capture the *single
primary theme* of each major section. **Do not include sub-points, specific
examples, or excessively detailed descriptions within a chapter title.** Next,
create a 'Resources' section by identifying and curating *only the most
essential and highly relevant URLs* that provide further information related to
the video's main topics, primary products, or significant discussions. These
URLs should be high-level, official documentation, GitHub repositories, or main
channel links (e.g., 'Subscribe to Firebase'). *Do not* extract URLs that are
merely links to images, internal assets, or specific files (e.g.,
`![][image1]`, `[2_photo1.png](...)`, `https://drive.google.com/...`). If a
resource is mentioned in the script without an explicit URL (e.g.,
'documentation linked in the description'), infer the *most specific and
directly relevant official URL* (e.g., a detailed Firebase documentation page
on the specific feature, a product's exact GitHub repo for the discussed
component, or a specific YouTube video related to the topic). Do not default to
general product overview pages if a more precise official resource exists. If a
URL is explicitly provided in the script and is a high-level resource (not an
image/asset link), include it. For each URL, provide a concise, descriptive
title. If a clear title cannot be inferred, list the URL directly. Aim for a
curated list of essential resources, typically 2-5 links, prioritizing quality
and relevance over sheer quantity. Subsequently, create a 'Speaker' section,
identifying the speaker from the script. If a full name is explicitly stated in
the script, use that. If only a partial name (e.g., 'Frank') or an alias (e.g.,
'puf') is mentioned, and the script provides sufficient context (e.g., 'My name
is Frank or puf'), use your internal knowledge to infer the speaker's most
complete and commonly recognized full name. If a full name cannot be inferred
with high confidence, use the most prominent single name or known alias that
appears in the script, prioritizing names mentioned in introductory or
concluding remarks as the speaker. If multiple first names or aliases are
presented, choose the one most emphasized or last mentioned as the speaker's
preferred identification. Then, list *only the most central and distinct
Firebase products, services, or related technologies* that are the *undeniable
primary focus* of the video or a dedicated, major section, under a 'Products
Mentioned' section. If the video's overarching subject is Firebase as a whole
or a new feature spanning multiple products, consider 'Firebase' itself as the
main product. Limit the selection to **no more than 3 main products**. Avoid
listing general terms, minor components, implicitly referenced items, or
creating exhaustive lists. If no distinct Firebase products are the singular,
undeniable focus, this section should be omitted or contain only 'Firebase' if
it is the general topic of the video. Finally, generate a concise list of highly
relevant hashtags, focusing on the *single most prominent main topic*, primary
products (if applicable), and overarching general themes of the video. Select
**exactly 2 to 4 impactful hashtags** that best represent the core content,
prioritizing uniqueness and broad discoverability. If the video is a 'Release Notes'
episode, `#FirebaseReleaseNotes` should always be included. Avoid generic or
overly specific terms if more impactful alternatives exist. Ensure there is no
redundant information across sections; the brief description should not repeat
details explicitly covered in the chapters or resources sections. All sections must
be clearly labeled, and the overall tone should be professional and engaging,
suitable for a technology organization's social media.
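The timestamp rule in the optimized prompt comes down to simple arithmetic: each chapter starts at the cumulative word count before it, converted to time at 150 words per minute. Here is a minimal sketch of that calculation. The function name `chapter_timestamps` and the word counts in the example are invented for illustration and are not taken from a real script.

```python
def chapter_timestamps(sections, words_per_minute=150):
    """Turn (title, word_count) pairs into "M:SS - Title" chapter lines.

    Each chapter starts at the cumulative word count of the sections before
    it. A final "End" line marks the estimated total duration.
    """
    lines = []
    cumulative_words = 0
    for title, word_count in sections:
        seconds = cumulative_words * 60 // words_per_minute
        lines.append(f"{seconds // 60}:{seconds % 60:02d} - {title}")
        cumulative_words += word_count
    total_seconds = cumulative_words * 60 // words_per_minute
    lines.append(f"{total_seconds // 60}:{total_seconds % 60:02d} - End")
    return lines


# Hypothetical word counts for a three-section script.
example_sections = [("Introduction", 50), ("AI updates", 400), ("Outro", 100)]
print(chapter_timestamps(example_sections))
# ['0:00 - Introduction', '0:20 - AI updates', '3:00 - Outro', '3:40 - End']
```

These are estimates based on an assumed speaking pace, so the timestamps are still worth checking against the final video during review.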

Output

Firebase Release Notes for November 2025 unveils powerful AI capabilities with
Gemini 3 integration into Firebase AI Logic, including the new Gemini 3 Pro
IMAGE and secure Server Prompt Templates. Developers will also find significant
backend enhancements for Data Connect, such as Event Triggers and Generated
Admin SDKs, alongside the General Availability of Cloud Firestore's Database
Clone. Debugging is supercharged with Crashlytics MCP Tools and BigQuery
export for session data, plus new device support for Firebase Test Lab.

### Chapters

0:00 - Introduction

0:15 - Firebase AI Logic & Gemini 3

3:10 - Backend & Data Connect Updates

4:36 - App Quality & Crashlytics Debugging

5:26 - Test Lab Device Updates

6:01 - Outro and Future Releases

6:46 - End

### Resources

* Getting Started with Gemini 3 in Firebase AI Logic: [https://firebase.blog/posts/2025/11/gemini-3-firebase-ai-logic](https://firebase.blog/posts/2025/11/gemini-3-firebase-ai-logic)

* Server Prompt Templates Documentation: [https://firebase.google.com/docs/ai-logic/server-prompt-templates/get-started?api=dev](https://firebase.google.com/docs/ai-logic/server-prompt-templates/get-started?api=dev)

* Data Connect November 2025 Updates Blog Post: [https://firebase.blog/posts/2025/11/dataconnect-nov25](https://firebase.blog/posts/2025/11/dataconnect-nov25)

* Data Connect Generated Admin SDK Documentation: [https://firebase.google.com/docs/data-connect/generate-admin-sdk](https://firebase.google.com/docs/data-connect/generate-admin-sdk)

* Crashlytics MCP with Gemini CLI Blog Post: [https://firebase.blog/posts/2025/11/crashlytics-mcp-with-gemini-cli](https://firebase.blog/posts/2025/11/crashlytics-mcp-with-gemini-cli)

* Subscribe to Firebase: [https://www.youtube.com/firebase](https://www.youtube.com/firebase)

### Speaker

Peter

### Products Mentioned

* Firebase AI Logic

* Data Connect

* Crashlytics

### Hashtags

#FirebaseReleaseNotes #FirebaseAILogic #Gemini3 #AppDevelopment