Many of you are already evaluating or using generative AI in your apps. By simply prompting the model, you can enable scenarios that were previously difficult or impossible to achieve.

Back in May 2024, we released the public preview of Vertex AI in Firebase, a new Firebase product with a suite of client SDKs that lets you harness the capabilities of the Gemini family of models directly from your mobile and web apps. You don’t need to touch your backend, which makes it easy and straightforward to integrate generative AI in your apps!

During these past months, thousands of projects have started using it, making millions of calls to the Vertex AI Gemini API via Firebase. Today, we are announcing the general availability of Vertex AI in Firebase! You can confidently use these Firebase SDKs to release your AI features into production, knowing they’re backed by Google Cloud and Vertex AI quality standards for security, privacy, scalability, reliability, and performance.

If you haven’t already, onboarding to use the Gemini API through Firebase takes just a few minutes with the help of the guided workflow in the Firebase console. To get started, select your Firebase project and visit the Build with Gemini page.

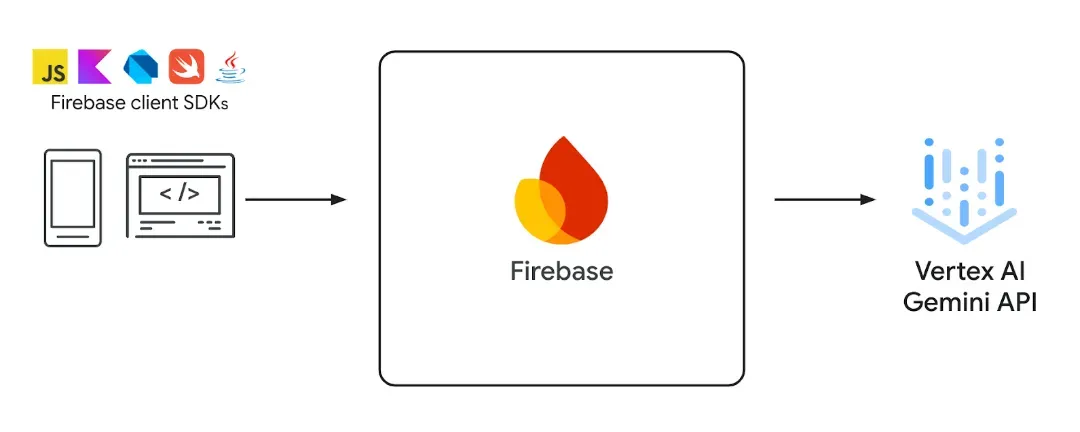

Access to the Vertex AI Gemini API from your client

Firebase serves as a bridge to the Vertex AI Gemini API, allowing you to use Gemini models directly from your app via Kotlin/Java, Swift, Dart, and JavaScript SDKs. With a few lines of code, you can start running inference with the model. In addition to prompting the latest multimodal Gemini models like Gemini 1.5 Flash and Gemini 1.5 Pro, the Firebase client SDKs allow you to use powerful features like system instructions, function calling, and generating structured output (like JSON).

Control output generation with JSON mode

Structured outputs in JSON format are critical for deep integration of the model into your application’s logic. You want to get consistent results, making sure the model always gives you JSON that matches your object types. We’ve added support for JSON schemas to ensure the model’s response is structured JSON output that matches your schema. This also means less cleanup work for you, as the data is already organized the way you need it.

    import FirebaseVertexAI

    // Provide a JSON schema for an array of animal-based character objects
    let jsonSchema = Schema.array(
      items: .object(
        properties: [
          "age": .integer(),
          "name": .string(),
          "species": .string(),
          "accessory": .string(nullable: true)
        ]
      )
    )

    // Initialize the Vertex AI service and the generative model
    let model = VertexAI.vertexAI().generativeModel(
      modelName: "gemini-1.5-flash",
      // Set the 'responseMimeType' and pass the JSON schema object
      generationConfig: GenerationConfig(
        responseMIMEType: "application/json",
        responseSchema: jsonSchema
      )
    )

    let prompt = """
      For use in a children's card game, generate 10 animal-based
      characters with an age, name, species, and optional accessory.
      """

    // Generate Content and process the result
    if let text = try? await model.generateContent(prompt).text { print(text) }

Access tools like external APIs and functions to generate responses

In essence, function calling allows the model to determine whether it needs the output of a function you’ve defined in your app before generating its final response. You provide the model with function definitions from your app (for example, getRestaurantsCloseTo(famousPlace: String): List<Restaurant>). When prompted with something like “list restaurants close to Madrid’s city hall,” the model might ask your app to execute getRestaurantsCloseTo("Puerta Del Sol") before generating the response with the list of restaurants.
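The round trip described above can be sketched in plain Kotlin, without the SDK. Everything here is an assumption made for illustration: `FunctionCall` is a hypothetical stand-in for the request the model sends back, `handle` is a hypothetical dispatcher, and the restaurant names are placeholder data rather than real results.

```kotlin
// Hypothetical types for illustration; these are not Firebase SDK classes.
data class Restaurant(val name: String)
data class FunctionCall(val name: String, val args: Map<String, String>)

// The app-side function the model may ask for (stubbed with placeholder data).
fun getRestaurantsCloseTo(famousPlace: String): List<Restaurant> =
    if (famousPlace == "Puerta Del Sol") {
        listOf(Restaurant("Casa Ejemplo"), Restaurant("Bar Muestra"))
    } else {
        emptyList()
    }

// Run the function the model requested and build the result to send back.
fun handle(call: FunctionCall): Map<String, List<String>> = when (call.name) {
    "getRestaurantsCloseTo" -> {
        val place = call.args.getValue("famousPlace")
        mapOf("restaurants" to getRestaurantsCloseTo(place).map { it.name })
    }
    else -> error("Unknown function: ${call.name}")
}

fun main() {
    // Simulates the model answering the prompt with a function call request.
    val requested = FunctionCall(
        "getRestaurantsCloseTo",
        mapOf("famousPlace" to "Puerta Del Sol")
    )
    // In a real app, this result would be sent back to the model.
    println(handle(requested))
}
```

The point of the sketch is the division of labor: the model only chooses the function name and arguments, while your app runs the code and returns the result.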

Here’s an in-depth example of how to set up and use function calling in an app:

    import android.content.Context
    import android.media.RingtoneManager
    import android.util.Log
    import com.google.firebase.Firebase
    import com.google.firebase.vertexai.vertexAI
    import com.google.firebase.vertexai.type.FunctionDeclaration
    import com.google.firebase.vertexai.type.FunctionResponsePart
    import com.google.firebase.vertexai.type.Schema
    import com.google.firebase.vertexai.type.Tool
    import com.google.firebase.vertexai.type.content
    import kotlinx.serialization.json.JsonObject
    import kotlinx.serialization.json.JsonPrimitive
    import kotlinx.serialization.json.jsonPrimitive

    enum class AudioReaction(val soundUri: Int) {
        CHEER(RingtoneManager.TYPE_NOTIFICATION),
        SADTROMBONE(RingtoneManager.TYPE_ALARM)
    }

    // Write a function in your app.
    // It returns a JsonObject so the result can be sent back to the model.
    private fun playSound(context: Context, reaction: AudioReaction): JsonObject {
        RingtoneManager
            .getRingtone(context, RingtoneManager.getDefaultUri(reaction.soundUri))
            .play()
        return JsonObject(mapOf("result" to JsonPrimitive("Sound Played")))
    }

    // Describe the function with both schema and descriptions.
    val playSoundTool = FunctionDeclaration(
        "playSound",
        description = "Plays a sound based on the desired audio reaction passed in.",
        parameters = mapOf(
            "reaction" to Schema.enumeration(
                listOf("CHEER", "SADTROMBONE"),
                description = "Type of reaction sound to play."
            )
        ),
    )

    // Initialize the Vertex AI generative model and chat session
    val chat = Firebase.vertexAI.generativeModel(
        modelName = "gemini-1.5-flash",
        // Provide the function declaration to the model.
        tools = listOf(Tool.functionDeclarations(listOf(playSoundTool)))
    ).startChat()

    // Send the user's question (the prompt) to the model using multi-turn chat.
    // sendMessage is a suspend function, so call it from a coroutine.
    val result = chat.sendMessage("Play a sound to celebrate the GA launch")

    // When the model responds with one or more function calls, invoke the function(s).
    val playSoundFunctionCall = result.functionCalls.find { it.name == "playSound" }

    // Forward structured input data for the function that was prepared by the model.
    // `context` and `TAG` are assumed to be defined by the surrounding class.
    playSoundFunctionCall?.let {
        val reactionString = it.args["reaction"]!!.jsonPrimitive.content
        val reaction = AudioReaction.valueOf(reactionString)
        val functionResponse = playSound(context, reaction)

        // Send the response(s) from the function back to the model
        // so that the model can use it to generate its final response.
        val finalResponse = chat.sendMessage(content("function") {
            part(FunctionResponsePart("playSound", functionResponse))
        })

        // Log the text response.
        Log.d(TAG, finalResponse.text ?: "No text in response")
    }

It’s also common to define multiple functions for the model to call, and sometimes the model needs the output of more than one function to craft its response. For that reason, the Vertex AI in Firebase SDKs support parallel function calling, where the model requests all the necessary functions in a single response.
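One way to handle several requested functions in a single turn is a small registry that maps each declared function name to its implementation. The following is a minimal sketch in plain Kotlin, not SDK code: `CallRequest`, `CallResult`, `handlers`, and `runAll` are hypothetical names, and `showConfetti` is an invented second function added only to show two calls arriving together.

```kotlin
// Hypothetical stand-ins for the SDK's function call and function response parts.
data class CallRequest(val name: String, val args: Map<String, String>)
data class CallResult(val name: String, val output: Map<String, String>)

// Registry mapping each declared function name to its app-side implementation.
val handlers: Map<String, (Map<String, String>) -> Map<String, String>> = mapOf(
    "playSound" to { args: Map<String, String> ->
        mapOf("result" to "Played ${args.getValue("reaction")}")
    },
    "showConfetti" to { args: Map<String, String> ->
        mapOf("result" to "Showed ${args.getValue("color")} confetti")
    }
)

// Execute every call from one model turn, preserving order, so that all
// results can be returned to the model together.
fun runAll(calls: List<CallRequest>): List<CallResult> = calls.map { call ->
    val handler = handlers[call.name] ?: error("No handler for ${call.name}")
    CallResult(call.name, handler(call.args))
}

fun main() {
    // Simulates a single model response that requests two functions at once.
    val calls = listOf(
        CallRequest("playSound", mapOf("reaction" to "CHEER")),
        CallRequest("showConfetti", mapOf("color" to "gold"))
    )
    runAll(calls).forEach { println("${it.name}: ${it.output["result"]}") }
}
```

Keeping the results in the same order as the requests makes it straightforward to pair each response with the call that produced it when you send them back.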

Suitable for every developer

Vertex AI in Firebase is also now governed by the Google Cloud Platform (GCP) Terms of Service, making it ready for production use by everyone, whether you’re an independent developer, work at a small company, or are part of an enterprise-scale corporation.

Operating under the Google Cloud ToS gives you a framework for data security, privacy, and service level agreements, so you can build and deploy AI-powered applications with confidence. You can also leverage the scalability of Google Cloud and Vertex AI to handle growing demand and ensure high performance for your AI features.

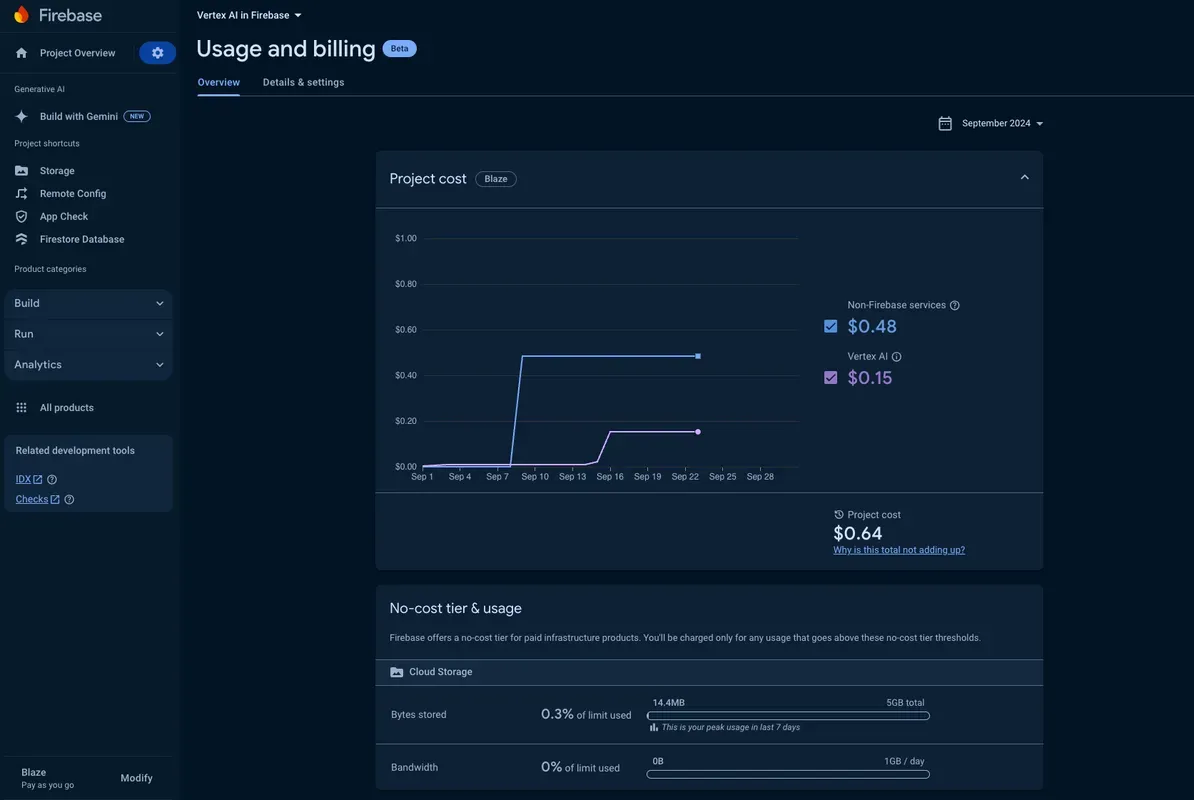

Stay on top of your costs

Once you start calling the Gemini API (especially in your production apps), we want to help you keep track of your spending. Check out the Usage and Billing dashboard in the Firebase console to set up billing alerts and view your project’s costs.

Get started now!

If you’re already familiar with Vertex AI in Firebase and have a project using it, then firstly, thank you for your early adoption! Secondly, visit the migration guide to learn about important updates and the changes needed to upgrade to the GA version of Vertex AI in Firebase.

If you’re new and want to use the Vertex AI in Firebase client SDKs to call the Vertex AI Gemini API directly from your app—they’re available for Swift, Android, Web, and Flutter—welcome! Check out our quickstarts and read our documentation.

These client libraries offer important integrations with other Firebase services that we strongly recommend for production apps:

- Firebase App Check: Help secure the Gemini API against unauthorized clients and protect your assets.

- Firebase Remote Config: Dynamically and conditionally control your prompts, as well as model names, versions, and configurations—all without releasing a new version of your app.

- Cloud Storage for Firebase: Send large image, video, audio, and PDF files in your multimodal requests.
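To illustrate the Remote Config point above, here is a minimal sketch of the fallback pattern in plain Kotlin. It does not call the Remote Config SDK: the `remote` map stands in for fetched values, and the key names (`model_name`, `system_prompt`), the default prompt text, and the `ModelSettings` type are all hypothetical.

```kotlin
// Hypothetical settings holder; the key names below are illustrative only.
data class ModelSettings(val modelName: String, val systemPrompt: String)

// In-app defaults used until remote values are available.
val defaults = mapOf(
    "model_name" to "gemini-1.5-flash",
    "system_prompt" to "You are a helpful assistant."
)

// Prefer a non-blank remote value; otherwise fall back to the in-app default.
fun resolveSettings(remote: Map<String, String>): ModelSettings {
    fun value(key: String): String =
        remote[key]?.takeIf { it.isNotBlank() } ?: defaults.getValue(key)
    return ModelSettings(value("model_name"), value("system_prompt"))
}

fun main() {
    // Simulates a fetched configuration that overrides only the model name.
    println(resolveSettings(mapOf("model_name" to "gemini-1.5-pro")))
}
```

Because the model name and prompt are resolved at runtime, switching models or adjusting a prompt becomes a configuration change rather than an app release.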

Your feedback is invaluable. Please request features, report bugs, or contribute code directly to our Firebase SDKs’ repositories. We also encourage you to participate in Firebase’s UserVoice to share your ideas and vote on existing ones.

We can’t wait to see what you build with Vertex AI in Firebase!

Happy coding!