We’re excited to announce updates that further integrate Firebase and Vertex AI, giving you more effective tools to build compelling AI-driven features directly into your mobile and web apps. These updates provide access to newer, more powerful models, introduce APIs tailored for interactive use cases, and expand platform support.

Experience natural conversations with Gemini’s Live API

You can now use Gemini’s Live API in Firebase to simplify building sophisticated conversational app experiences. The Live API lets you stream audio or text input to the model and receive streaming audio and text responses, making natural, interactive dialogue possible. You can build real-time voice interactions that handle interruptions and fluid back-and-forth exchanges. Firebase manages the underlying technical complexities of this two-way communication, freeing you to focus on crafting intuitive user experiences for your app.

The Android and Flutter public preview SDKs are now available. Support for additional platforms is coming soon.

Here’s a glimpse of how you can use it in Kotlin (Android):

    // Initialize the Vertex AI service and create a `LiveModel` instance
    // Specify a Gemini model that supports your use case
    val model = Firebase.vertexAI.liveModel(
        modelName = "gemini-2.0-flash-exp",
        // Configure the model to respond with audio during this session
        generationConfig = liveGenerationConfig {
            speechConfig = SpeechConfig(voice = Voices.CHARON)
            responseModality = ResponseModality.AUDIO
        }
    )

    val session = model.connect()
    session.startAudioConversation()
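Firebase handles interruptions for you, so you do not need to write this logic yourself. Purely to illustrate the idea, here is a conceptual sketch in JavaScript of how a client might stop playing a streamed response once the user starts speaking. It does not use the Live API: `playUntilInterrupted`, `play`, and `fakeResponse` are hypothetical names invented for this illustration, and the real SDKs may work quite differently internally.

```javascript
// Conceptual sketch only: none of these names come from a Firebase SDK.
// Plays streamed response chunks in order and stops as soon as the
// AbortSignal fires (for example, because the user started speaking).
async function playUntilInterrupted(chunks, play, signal) {
  const played = [];
  for await (const chunk of chunks) {
    if (signal.aborted) break; // interruption: drop the rest of the response
    await play(chunk);
    played.push(chunk);
  }
  return { played, interrupted: signal.aborted };
}

// Stand-in for a streamed model response.
async function* fakeResponse() {
  yield 'Hello, ';
  yield 'how can ';
  yield 'I help?';
}

// Simulate the user cutting in while the second chunk is playing.
const controller = new AbortController();
const play = async (chunk) => {
  console.log('playing:', chunk);
  if (chunk === 'how can ') controller.abort();
};

playUntilInterrupted(fakeResponse(), play, controller.signal)
  .then((result) => console.log(result));
```

The point of the sketch is the check at the top of the loop: once the user interrupts, the remaining chunks are dropped rather than played, which is what makes the exchange feel like a conversation instead of a recording.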

Expand your reach with React Native support

We’re dedicated to supporting developers across all major platforms, so we’re thrilled to expand Vertex AI in Firebase to React Native, one of the most popular cross-platform app development frameworks. You can now incorporate Gemini APIs into your React Native applications securely and directly, bringing AI-powered features to even more users and devices.

Here’s an example using the @react-native-firebase/vertexai module to generate text:

    import React from 'react';
    import { AppRegistry, Button, Text, View } from 'react-native';
    import { getApp } from '@react-native-firebase/app';
    import { getVertexAI, getGenerativeModel } from '@react-native-firebase/vertexai';

    function App() {
      return (
        <View>
          <Button
            title="Write Poem"
            onPress={async () => {
              const app = getApp();
              const vertexai = getVertexAI(app);
              const model = getGenerativeModel(vertexai, { model: 'gemini-2.0-flash' });

              const result = await model.generateContent('Write a poem about a React Native developer');

              console.log(result.response.text());
            }}
          />
        </View>
      );
    }

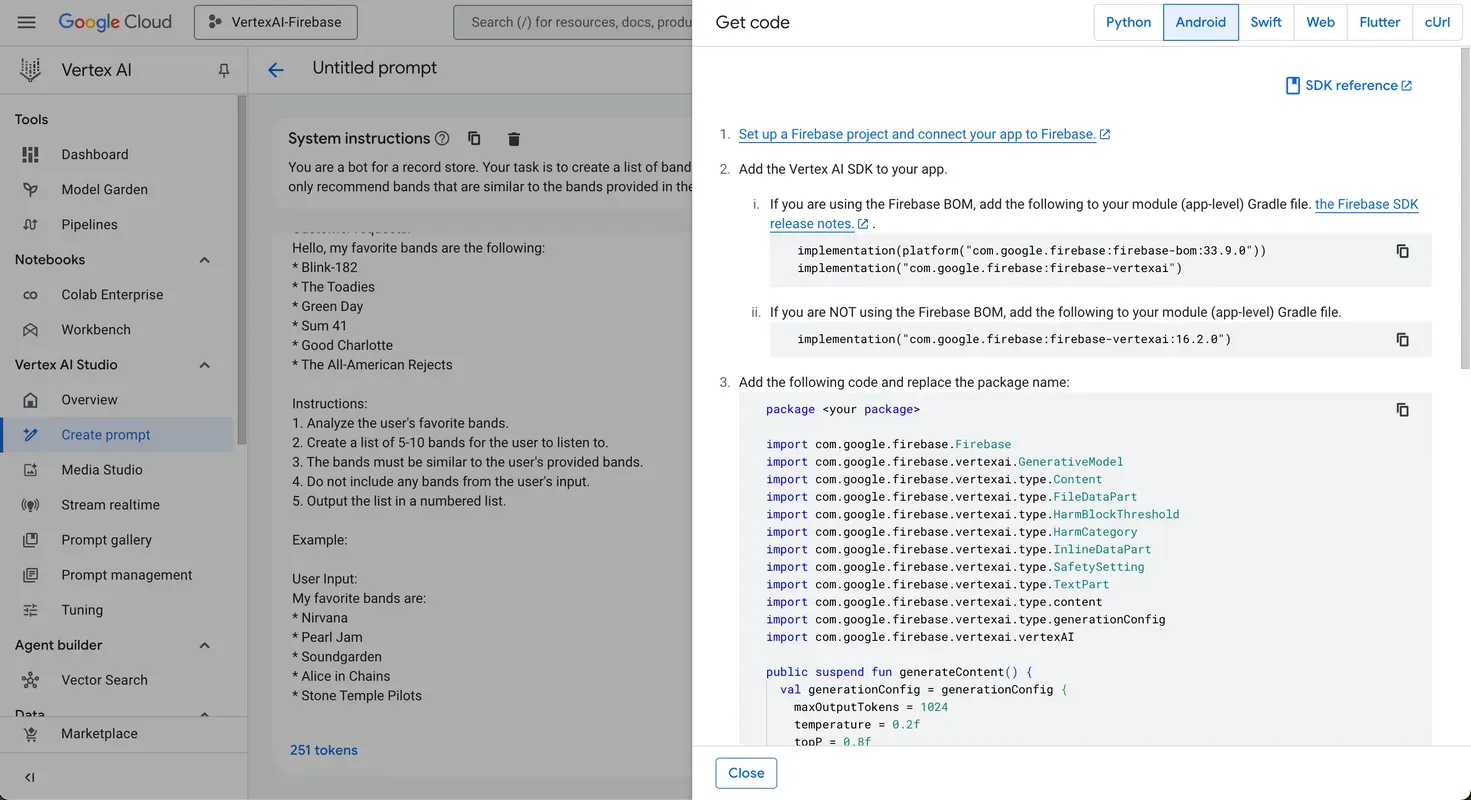
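If you would rather show the poem as it is generated instead of waiting for the full response, a streaming variant is one option. The sketch below assumes the model object exposes `generateContentStream(prompt)`, resolving to an object whose `stream` is an async iterable of chunks with a `text()` method, as in the Firebase JS SDK; check the module guide to confirm this for your version. `streamText` and `fakeModel` are hypothetical names used only for illustration, and the fake model lets the snippet run without Firebase.

```javascript
// Hypothetical helper (not part of any Firebase SDK): streams a response
// and reports each text chunk as it arrives.
// Assumption: `model.generateContentStream(prompt)` resolves to an object
// whose `stream` is an async iterable of chunks with a text() method.
async function streamText(model, prompt, onChunk) {
  const { stream } = await model.generateContentStream(prompt);
  let fullText = '';
  for await (const chunk of stream) {
    const piece = chunk.text();
    fullText += piece;
    onChunk(piece); // e.g. append to component state to update the UI
  }
  return fullText;
}

// Stand-in model so the sketch runs without Firebase. In an app, pass the
// model returned by getGenerativeModel() instead.
const fakeModel = {
  async generateContentStream(prompt) {
    async function* chunks() {
      for (const piece of ['Roses are red, ', 'bundles are read.']) {
        yield { text: () => piece };
      }
    }
    return { stream: chunks() };
  },
};

streamText(fakeModel, 'Write a poem', (piece) => console.log(piece));
```

In the `onPress` handler above, you could call the helper with the real model and have the `onChunk` callback append each piece to a state variable rendered in a `Text` component.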

Get Firebase SDK code snippets from Vertex AI Studio

To speed up ideation and testing, you can now get Firebase SDK code snippets within Vertex AI Studio. While you’re experimenting with or refining prompts in Vertex AI Studio, you can instantly get the setup and initialization code for your target platform, including Android, Swift, Web, and Flutter.

Get started and build what’s next

These updates bring powerful new ways to integrate Vertex AI directly into your Firebase applications. They provide practical building blocks for creating richer AI-powered features securely from your client-side code, managed through the Firebase platform you already use. Here are some resources to get you started:

- Live API documentation for Android and Flutter

- React Native Vertex AI module guide

- Vertex AI in Firebase documentation

- Firebase SDK Code Snippets in Vertex AI Studio

Let us know what you think about these new capabilities and share your creations with the Firebase community. We’re excited to see what you build!