At Firebase, we want to help app developers seize the prevailing AI moment and gain a competitive edge in the market. At Google I/O, we announced many new features that help you integrate AI into your Firebase development workflow. In this post, we dive into the details of Gemini in Firebase, an AI assistant that gives you instant access to the Gemini model from the Firebase console.

Gemini in Firebase delivers AI-assisted capabilities that help you build apps faster and with higher quality. It uses a customized version of the Gemini model, tuned with extensive Firebase expertise to provide specialized, targeted assistance for Firebase developers. Built on Gemini for Google Cloud, Gemini in Firebase enables organizations to adopt AI assistance at scale while meeting security, privacy, and compliance requirements.

How it works

Gemini in Firebase is integrated within Firebase console workflows and can quickly answer your questions about Firebase features, generate code snippets, and offer troubleshooting support to improve app quality.

Gemini is also integrated with Firebase Crashlytics to provide AI-powered issue analysis. AI assistance in Crashlytics can generate a cohesive crash summary, sample code, recommended next steps, and industry best practices in context (and in seconds!), kickstarting your debugging and speeding up triage. To learn more, check out “Solve issues faster with AI Insights in Crashlytics.”

Examples of prompts you can use

- Ask general questions about Firebase product capabilities:

  - Does Firebase provide a feature flagging capability?

- Ask general questions about Firebase project management and getting started:

  - How do I remove a member from a Firebase project?

  - I’m building an app with Flutter and Firebase. Do I register both my iOS target and Android target in the same Firebase project?

- Troubleshoot issues in natural language:

  - I’m trying to upload an image for an in-app message I’m sending, but when I do, the screen just loads and loads without ever moving forward. I’ve tried to do this with multiple images on multiple browsers.

  - What does the “no-auth-event” error code mean?

- Generate code:

  - Show me how to add Firebase App Check to my iOS app

  - Generate a Dart code snippet to upload a file to Firebase Cloud Storage. Here are the specific requirements:
    1. User-specific storage: Ensure the uploaded file is stored in a path unique to the currently authenticated Firebase user.
    2. File size limit: Before initiating the upload, check that the file size is less than 5 MB.

- Explain code:

  - Explain the code step by step (paste a code snippet)

- Generate tests:

  - Generate a unit test in the same language for the following code snippet. Ensure that the test thoroughly covers the functionality of the provided code. Include relevant test cases and assertions to validate its behavior. (paste a code snippet)

- Suggest improvements to code:

  - Make my code readable (paste a code snippet)

  - Help me improve my code snippet to reduce nesting. Here are the specific requirements: (1) First understand the high-level idea of the code snippet; (2) Then improve this code while preserving its functionality, based on the requirement that I provide; (3) Then explain how you improved the code after the improved code block. (paste a code snippet)

The “Making of” Gemini in Firebase

Since our announcement at Firebase Demo Day in October 2023, we have received significant interest from developers asking to join our private preview, along with many questions about how we built the service. We’d like to share a behind-the-scenes look at how we built Gemini in Firebase. In particular, we cover how we augment Google’s foundational Gemini models with Firebase knowledge. We also cover how we support use cases specific to app development, like crash insights with Firebase Crashlytics.

Model tuning

We used Google Cloud Vertex AI to perform supervised fine-tuning of Gemini using LoRA (Low-Rank Adaptation). This lets us tailor the broad capabilities of Gemini to be more relevant to Firebase and to the problem spaces our users care about most.
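For readers unfamiliar with LoRA, here is a plain-Python sketch of the general technique. It is not the Vertex AI tuning service used for Gemini in Firebase.

The pretrained weight matrix `W0` stays frozen. Only two small matrices, `A` and `B`, are trained, so the adapted layer computes `W0·x + (alpha/r)·B·(A·x)`. The class name `LoRALinear` and the initialization choices below are assumptions made for illustration.

```python
"""Minimal sketch of a LoRA (Low-Rank Adaptation) linear layer.

Illustrative only: this is not Firebase's or Vertex AI's tuning code.
"""
import random

Matrix = list[list[float]]
Vector = list[float]


def matvec(m: Matrix, v: Vector) -> Vector:
    """Multiply a matrix (stored as a list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


class LoRALinear:
    """A frozen base weight W0 plus a trainable low-rank update B @ A."""

    def __init__(self, w0: Matrix, rank: int, alpha: float, seed: int = 0):
        d_out, d_in = len(w0), len(w0[0])
        rng = random.Random(seed)
        self.w0 = w0  # frozen pretrained weight, shape (d_out, d_in)
        # A: (rank, d_in) with a small random init; B: (d_out, rank) with a zero init,
        # so the adapted layer starts out identical to the base layer.
        self.a = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(rank)]
        self.b = [[0.0] * rank for _ in range(d_out)]
        self.scale = alpha / rank

    def forward(self, x: Vector) -> Vector:
        base = matvec(self.w0, x)
        delta = matvec(self.b, matvec(self.a, x))  # low-rank path: B(Ax)
        return [h + self.scale * d for h, d in zip(base, delta)]

    def trainable_params(self) -> int:
        """Only A and B are trained; W0 is left untouched."""
        return len(self.a) * len(self.a[0]) + len(self.b) * len(self.b[0])
```

Because `B` starts at zero, the adapted layer initially reproduces the base layer exactly. For a `d_out × d_in` weight, the number of trainable parameters is `r·(d_in + d_out)` rather than `d_in·d_out`, which is what makes this style of tuning comparatively cheap.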

We leveraged Firebase’s extensive documentation, a comprehensive collection of Firebase knowledge curated and published over the years by members of our team. Additionally, over the last seven years the Firebase Community Support team has built up internal playbooks that contain a wealth of troubleshooting information across all Firebase products. We converted these into prompt and response pairs. We also enlisted the entire Firebase Engineering, Developer Relations, and Support teams to crowdsource hundreds of prompts spanning all Firebase products.
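As a rough illustration of the conversion step described above, the hypothetical sketch below turns troubleshooting entries into prompt and response pairs serialized as JSON Lines. JSON Lines is a common input format for supervised tuning. The `PlaybookEntry` fields and the `"prompt"`/`"response"` keys are assumptions, not Firebase’s internal format.

```python
"""Hypothetical sketch: turning troubleshooting playbook entries into
prompt/response pairs for supervised fine-tuning, written as JSON Lines.

The PlaybookEntry fields and the output keys ("prompt", "response") are
assumptions for illustration, not Firebase's internal format.
"""
import json
from dataclasses import dataclass


@dataclass
class PlaybookEntry:
    product: str      # Firebase product the entry applies to
    symptom: str      # what the developer reports
    resolution: str   # the support team's recommended fix


def to_pair(entry: PlaybookEntry) -> dict[str, str]:
    """Phrase the symptom as a user question and the resolution as the answer."""
    prompt = f"[{entry.product}] {entry.symptom.strip()}"
    return {"prompt": prompt, "response": entry.resolution.strip()}


def to_jsonl(entries: list[PlaybookEntry]) -> str:
    """Emit one JSON object per line, skipping entries with an empty resolution."""
    lines = [json.dumps(to_pair(e)) for e in entries if e.resolution.strip()]
    return "\n".join(lines)
```

Dropping entries without a resolution keeps unanswered cases out of the training data. Such cases would teach the model nothing about how to respond.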

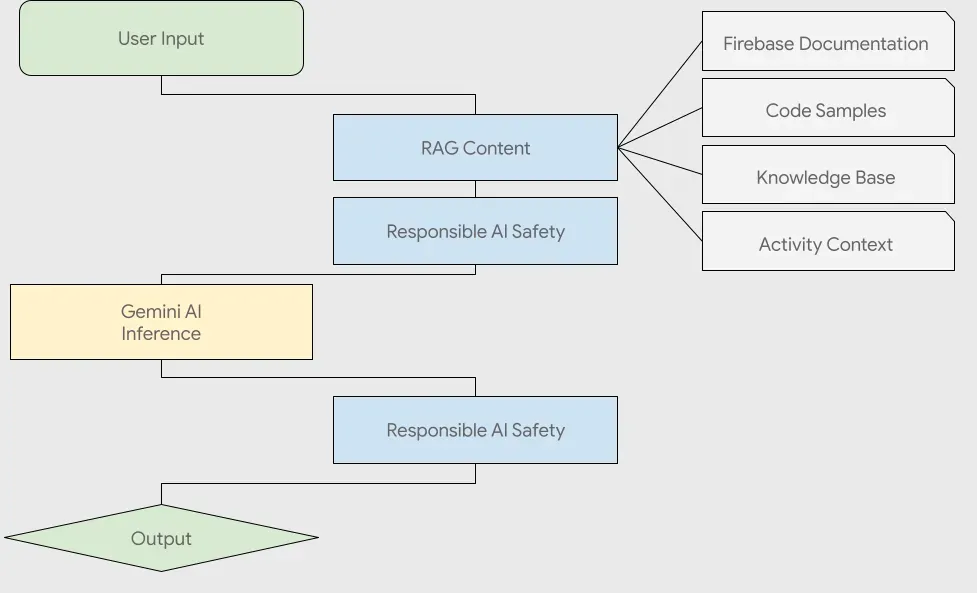

We stored all of these assets in a vector database and used them for Retrieval-Augmented Generation (RAG) to strengthen and ground the model’s generated answers. RAG gives the model access to up-to-date information without expensive retraining cycles. Lastly, we use the Google Responsible AI service to validate the model’s answers and ensure content safety.
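The RAG flow described above can be sketched in a few lines. This is a minimal illustration under stated assumptions. A bag-of-words vector stands in for a learned embedding model, and an in-memory `VectorStore` class (a hypothetical name) stands in for a real vector database. The sketch only builds the grounded prompt; sending it to Gemini is out of scope.

```python
"""Minimal retrieval-augmented generation (RAG) sketch.

Assumptions: a bag-of-words vector stands in for a learned embedding model,
and an in-memory list stands in for a vector database. The final prompt
would be sent to the model; that call is out of scope here.
"""
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class VectorStore:
    def __init__(self) -> None:
        self.docs: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self.docs.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        """Return the k stored passages most similar to the query."""
        q = embed(query)
        ranked = sorted(self.docs, key=lambda d: cosine(q, d[1]), reverse=True)
        return [text for text, _ in ranked[:k]]


def grounded_prompt(store: VectorStore, question: str, k: int = 2) -> str:
    """Prepend the top-k retrieved passages so the answer is grounded in them."""
    context = "\n".join(f"- {p}" for p in store.search(question, k))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )
```

Updating the knowledge the model sees only requires calling `add` with new passages; the model weights stay untouched. This is the property the paragraph above relies on to avoid retraining cycles.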

Model evaluation

To evaluate the model effectively, we used both traditional and non-traditional methods to ensure that it responds predictably, accurately, and relevantly. We built an evaluation set of “golden prompts”: a corpus of prompts paired with ideal responses, covering the different user experiences in which we expect the model to be used. This golden set draws on many years of knowledge and experience from people across different parts of Firebase. It favors quality over quantity: it contains a few hundred prompt-response pairs, each with a high-quality reference response.

We run this golden evaluation set periodically, comparing the model’s output against the ideal responses to check consistency and drive continuous improvement across model and tuning iterations. We built custom dashboards that track these key metrics across evaluation iterations. As the prompts evolve, we update the golden set so that it stays accurate. We are also working on prompt templates that make this process easier and more scalable. This evaluation framework lets us not only evaluate prompts but also perform “prompt tuning” to find the prompts that yield the most accurate responses for users.
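To make the iteration-over-iteration comparison concrete, here is a minimal sketch that scores two model iterations against a golden set and flags per-prompt regressions. Several pieces are assumptions rather than what the Firebase team uses. The metric is SQuAD-style token-overlap F1, which stands in for a real similarity or rubric score. The `tolerance` threshold and the function names are hypothetical. Model outputs are passed in as plain dictionaries keyed by prompt.

```python
"""Sketch of scoring model iterations against a "golden" prompt set.

Assumptions: token-overlap F1 stands in for whatever similarity or rubric
scoring a real pipeline would use, and model outputs are passed in as
plain dicts keyed by prompt.
"""
import re
from collections import Counter


def tokens(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def token_f1(candidate: str, reference: str) -> float:
    """Harmonic mean of token precision and recall (SQuAD-style F1)."""
    c, r = tokens(candidate), tokens(reference)
    overlap = sum((c & r).values())  # Counter & keeps the minimum count per token
    if overlap == 0:
        return 0.0
    precision = overlap / sum(c.values())
    recall = overlap / sum(r.values())
    return 2 * precision * recall / (precision + recall)


def evaluate(golden: dict[str, str], outputs: dict[str, str]) -> dict[str, float]:
    """Score each golden prompt; a missing output scores 0."""
    return {p: token_f1(outputs.get(p, ""), ideal) for p, ideal in golden.items()}


def compare(
    golden: dict[str, str],
    old_outputs: dict[str, str],
    new_outputs: dict[str, str],
    tolerance: float = 0.05,
) -> dict[str, object]:
    """Mean score per iteration plus prompts whose score dropped by more than tolerance."""
    old, new = evaluate(golden, old_outputs), evaluate(golden, new_outputs)
    regressions = sorted(p for p in golden if new[p] < old[p] - tolerance)
    return {
        "old_mean": sum(old.values()) / len(old),
        "new_mean": sum(new.values()) / len(new),
        "regressions": regressions,
    }
```

Tracking per-prompt regressions alongside the mean matters because an iteration can improve the average while getting worse on specific prompts. A dashboard of the kind mentioned above would plot these values over time.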

We also employed some novel techniques for non-automated (human) evaluation. The Firebase Developer Support team extensively tested Gemini in Firebase with common customer questions, building a knowledge base of hundreds of additional prompts. We use these prompts for spot checks, and they are candidates for future inclusion in the golden prompt corpus.

Additionally, we built AI assistance in Crashlytics by adopting different prompting techniques, such as zero-shot and chain-of-thought prompting, and running our evaluations across a variety of issues and prompts. We also added Android documentation and insights from past issues to a RAG data corpus to improve the quality of our responses and make them insightful and actionable. We score responses on rubrics such as “relevancy” and “consistency”. Relevancy measures how relevant a model’s response is to the domain and context. Consistency measures how factually consistent a model’s responses are with ground truth. Based on these metrics, we can perform further prompt and/or model tuning to improve response quality.
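To illustrate the difference between the two prompting techniques named above, the hypothetical sketch below builds both kinds of prompt for a crash summary. A zero-shot prompt gives a direct instruction with no worked examples. A chain-of-thought prompt asks the model to reason through intermediate steps before answering. The `CrashIssue` fields, the optional `context_docs` slot for retrieved RAG passages, and all prompt wording are assumptions, not the prompts Crashlytics actually uses.

```python
"""Hypothetical prompt builders contrasting zero-shot and chain-of-thought
prompting for a crash summary. The CrashIssue fields and the prompt wording
are illustrative assumptions, not Crashlytics' actual prompts.
"""
from dataclasses import dataclass, field


@dataclass
class CrashIssue:
    title: str
    stack_trace: str
    context_docs: list[str] = field(default_factory=list)  # e.g. retrieved RAG passages


def _header(issue: CrashIssue) -> str:
    """Shared issue description, plus any retrieved documentation."""
    parts = [f"Crash: {issue.title}", f"Stack trace:\n{issue.stack_trace}"]
    if issue.context_docs:
        parts.append("Relevant documentation:\n" + "\n".join(f"- {d}" for d in issue.context_docs))
    return "\n\n".join(parts)


def zero_shot_prompt(issue: CrashIssue) -> str:
    """Zero-shot: a direct instruction with no worked examples."""
    return _header(issue) + "\n\nSummarize the likely cause of this crash and suggest a fix."


def chain_of_thought_prompt(issue: CrashIssue) -> str:
    """Chain-of-thought: ask the model to reason step by step before answering."""
    steps = [
        "1. Identify the frame in the stack trace where the crash originates.",
        "2. Explain what condition at that frame could cause the failure.",
        "3. Propose a fix and next debugging steps.",
    ]
    return (
        _header(issue)
        + "\n\nThink through the problem step by step:\n"
        + "\n".join(steps)
        + "\nThen give a final answer under the heading 'Summary:'."
    )
```

Running both variants over the same set of issues and scoring the outputs on rubrics like relevancy and consistency is one way to decide which technique works better for a given task.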

Give it a try!

Gemini in Firebase is now generally available, and you can access it directly in the Firebase console. We invite you to try it out and share your feedback. During the promotional period, which ends July 30, 2024, you won’t be charged for Gemini in Firebase usage. For more information, see Firebase pricing plans.